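Installation

Creating the Kubernetes clusters

The deployment model is multi-network, multi-primary (one control plane per cluster). Two Kubernetes clusters are installed with k3s; after installation, run the following: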

# Copy the kubeconfig; istioctl needs it
cp /etc/rancher/k3s/k3s.yaml ~/.kube/config

# Create the root certificate and one intermediate certificate per cluster
cd ~/test/istio-1.18.2
mkdir -p certs && cd certs
make -f ../tools/certs/Makefile.selfsigned.mk root-ca
make -f ../tools/certs/Makefile.selfsigned.mk cluster1-cacerts
make -f ../tools/certs/Makefile.selfsigned.mk cluster2-cacerts

# scp the certs directory to the cluster1 and cluster2 nodes

Creating the root and intermediate certificates

On the cluster1 and cluster2 nodes, install the certificates of the corresponding Istio cluster:

# cluster1
kubectl create namespace istio-system
kubectl create secret generic cacerts -n istio-system \
  --from-file=cluster1/ca-cert.pem \
  --from-file=cluster1/ca-key.pem \
  --from-file=cluster1/root-cert.pem \
  --from-file=cluster1/cert-chain.pem

# cluster2
kubectl create namespace istio-system
kubectl create secret generic cacerts -n istio-system \
  --from-file=cluster2/ca-cert.pem \
  --from-file=cluster2/ca-key.pem \
  --from-file=cluster2/root-cert.pem \
  --from-file=cluster2/cert-chain.pem

Installing Istio

Configure IstioOperator as follows. For the multi-network, multi-primary scenario, the network names for node1 and node2 are set to network1 and network2, the cluster names to cluster1 and cluster2, and the files are named cluster1.yaml and cluster2.yaml respectively. The listing below is the cluster1 variant.

apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  hub: docker.io/istio
  meshConfig:
    accessLogFile: /dev/stdout
    accessLogEncoding: "JSON"
    defaultConfig:
      proxyMetadata:
        ISTIO_META_DNS_CAPTURE: "true"
  tag: 1.18.2
  profile: demo
  components:
    ingressGateways:
      - name: istio-eastwestgateway
        label:
          istio: eastwestgateway
          app: istio-eastwestgateway
          topology.istio.io/network: network1
        enabled: true
        k8s:
          env:
            # traffic through this gateway should be routed inside the network
            - name: ISTIO_META_REQUESTED_NETWORK_VIEW
              value: network1
          service:
            ports:
              - name: status-port
                port: 15021
                targetPort: 15021
              - name: tls
                port: 15443
                targetPort: 15443
              - name: tls-istiod
                port: 15012
                targetPort: 15012
              - name: tls-webhook
                port: 15017
                targetPort: 15017
  values:
    global:
      logging:
        level: default:debug
      configValidation: true
      istioNamespace: istio-system
      meshID: mesh1
      multiCluster:
        clusterName: cluster1
      network: network1
    gateways:
      istio-ingressgateway:
        injectionTemplate: gateway

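expose-services.yaml is configured as follows. With this setting, the Gateway becomes the traffic entry point for all services of this cluster's data plane; in other words, all services share a single ingress gateway (a single IP). This relies on SNI (Server Name Indication) in TLS: services need no VirtualService, because the SNI value in the TLS handshake identifies the upstream, and the service's host, subset and port can all be encoded in the SNI.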

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: cross-network-gateway
spec:
  selector:
    istio: eastwestgateway
  servers:
    - port:
        number: 15443
        name: tls
        protocol: TLS
      tls:
        mode: AUTO_PASSTHROUGH
      hosts:
        - "*.local"
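
The SNI encoding described for expose-services.yaml can be illustrated with a small Go sketch. The helpers buildSNI and parseSNI are hypothetical names used only for this illustration (they are not Istio functions); the layout `outbound_.<port>_.<subset>_.<host>` is inferred from the SNI value "outbound_.5000_._.helloworld.sample.svc.cluster.local" that shows up in the proxy configuration in this walkthrough.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// buildSNI is a hypothetical helper that encodes direction, port, subset and
// host into a single SNI string. An empty subset yields "..._._.host".
func buildSNI(direction string, port int, subset, host string) string {
	return direction + "_." + strconv.Itoa(port) + "_." + subset + "_." + host
}

// parseSNI reverses buildSNI. It assumes the subset contains no "_." sequence.
func parseSNI(sni string) (direction string, port int, subset, host string, err error) {
	parts := strings.SplitN(sni, "_.", 4)
	if len(parts) != 4 {
		return "", 0, "", "", fmt.Errorf("unexpected SNI format: %q", sni)
	}
	port, err = strconv.Atoi(parts[1])
	if err != nil {
		return "", 0, "", "", fmt.Errorf("invalid port in SNI %q: %w", sni, err)
	}
	return parts[0], port, parts[2], parts[3], nil
}

func main() {
	sni := buildSNI("outbound", 5000, "", "helloworld.sample.svc.cluster.local")
	fmt.Println(sni)

	direction, port, subset, host, err := parseSNI(sni)
	fmt.Println(direction, port, subset, host, err)
}
```

Because the whole routing key travels in the TLS ClientHello, a gateway in AUTO_PASSTHROUGH mode can pick the upstream without terminating TLS.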

Deploy Istio with the following commands:

# cluster1
istioctl install -f cluster1.yaml
kubectl apply -n istio-system -f expose-services.yaml
# The generated manifests can be inspected with:
# istioctl manifest generate -f cluster1.yaml > out_all.yaml
# Check the external IP; it is the private IP of the cluster1 cloud host
kubectl get svc istio-eastwestgateway -n istio-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)
istio-eastwestgateway LoadBalancer 10.43.102.254 192.168.111.205 15021:31047/TCP...

# cluster2
istioctl install -f cluster2.yaml
kubectl apply -n istio-system -f expose-services.yaml
# Check the external IP; it is the private IP of the cluster2 cloud host
kubectl get svc istio-eastwestgateway -n istio-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)
istio-eastwestgateway LoadBalancer 10.43.109.162 192.168.111.131 15021:31047/TCP...

In each cluster, create a secret that holds the credentials for accessing the other cluster's API server:

# On cluster1: use this node's external IP, which must be reachable from the other cluster
istioctl x create-remote-secret --name=cluster1 --server=https://192.168.111.205:6443 > cluster1-remote-secret.yaml

# On cluster2: use this node's external IP, which must be reachable from the other cluster
istioctl x create-remote-secret --name=cluster2 --server=https://192.168.111.131:6443 > cluster2-remote-secret.yaml

# On cluster2
kubectl apply -f cluster1-remote-secret.yaml

# On cluster1
kubectl apply -f cluster2-remote-secret.yaml

Verifying the deployment

Deploy the helloworld and sleep test services in both clusters:

# cluster1 and cluster2
kubectl create namespace sample
kubectl label namespace sample istio-injection=enabled
kubectl apply -f samples/helloworld/helloworld.yaml \
  -l service=helloworld -n sample
kubectl apply -f samples/sleep/sleep.yaml -n sample

# cluster1
kubectl apply -f samples/helloworld/helloworld.yaml \
  -l version=v1 -n sample

# cluster2
kubectl apply -f samples/helloworld/helloworld.yaml \
  -l version=v2 -n sample

Test and inspect from both clusters:

# cluster1: list the endpoints; one of them is the eastwestgateway address of the other cluster
istioctl pc endpoints -nsample "$(kubectl get pod -n sample -l app=sleep -o jsonpath='{.items[0].metadata.name}')" --cluster "outbound|5000||helloworld.sample.svc.cluster.local"
ENDPOINT STATUS OUTLIER CHECK CLUSTER
10.42.0.59:5000 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local
192.168.111.131:15443 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local

# cluster1: test with curl
kubectl exec -n sample -c sleep "$(kubectl get pod -n sample -l \
  app=sleep -o jsonpath='{.items[0].metadata.name}')" \
  -- curl helloworld.sample:5000/hello

# cluster2
istioctl pc endpoints -nsample "$(kubectl get pod -n sample -l \
  app=sleep -o jsonpath='{.items[0].metadata.name}')" --cluster "outbound|5000||helloworld.sample.svc.cluster.local"
ENDPOINT STATUS OUTLIER CHECK CLUSTER
10.42.0.15:5000 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local
192.168.111.205:15443 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local

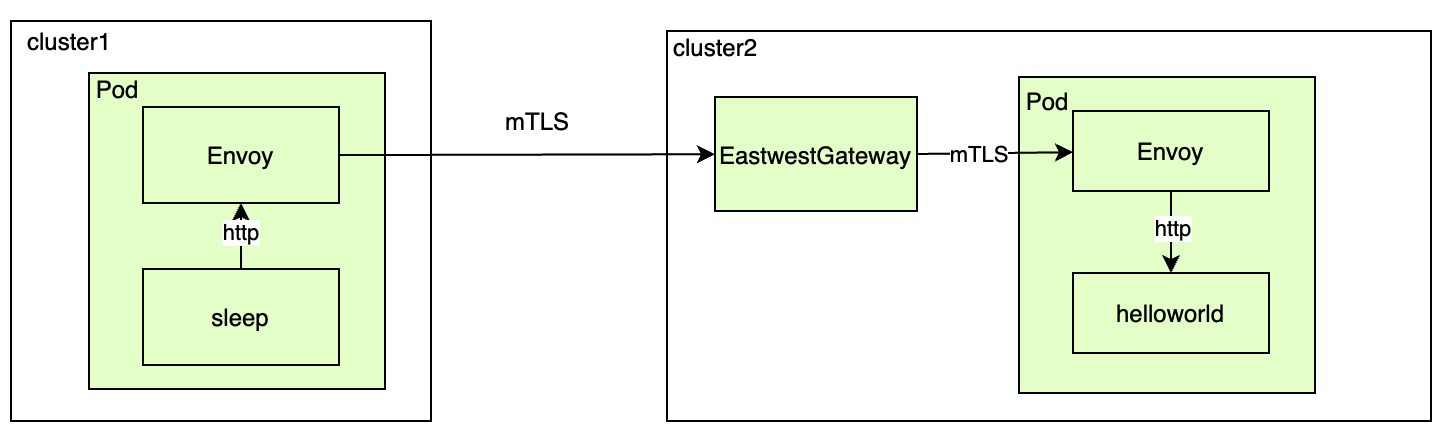

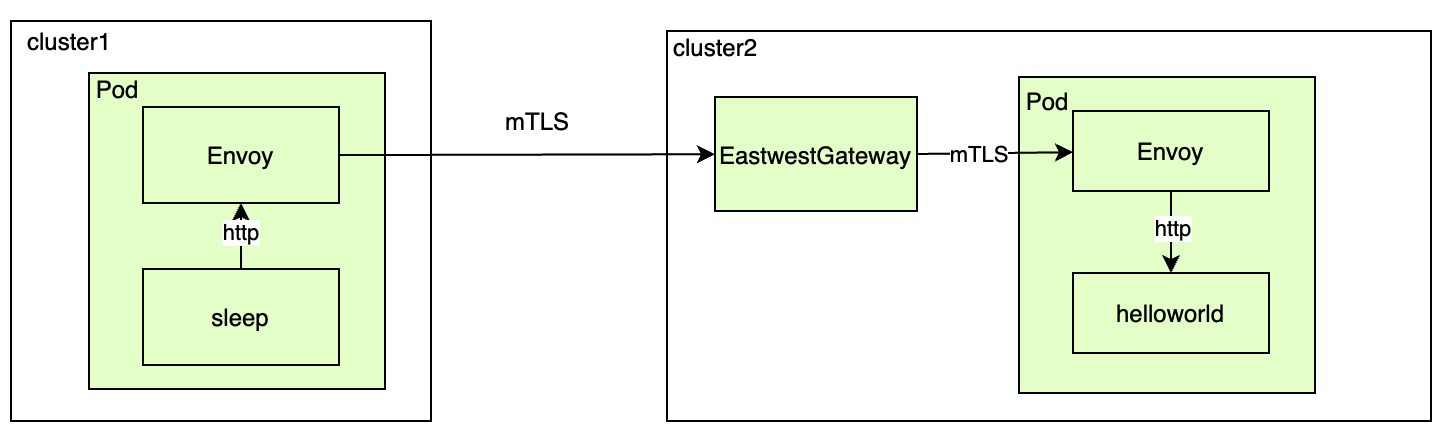

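Analysis of cross-cluster access

The overall request flow is shown in the diagram: inside a pod, traffic between the application and its sidecar is plain HTTP; between pods it is mTLS; and a cross-cluster request first reaches the eastwestgateway pods of cluster2, which forward it to the service pods.

Client-side Envoy routing and certificate configuration

Take a request from the sleep pod in cluster1 to the helloworld service in cluster2 as the example. First fetch the outbound configuration of the sleep pod: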

# The listener config yields "routeConfigName": "5000"; the protocol here is HTTP
istioctl pc listener sleep-9454cc476-5sbrw.sample --port 5000 -ojson

# The route config yields "cluster": "outbound|5000||helloworld.sample.svc.cluster.local"
istioctl pc route sleep-9454cc476-5sbrw.sample --name 5000 -ojson

# Fetch the cluster config; it carries the TLS authentication settings, so the protocol here is TLS
istioctl pc cluster sleep-9454cc476-5sbrw.sample --fqdn "outbound|5000||helloworld.sample.svc.cluster.local" -ojson

# Fetch the endpoints
istioctl pc endpoints sleep-9454cc476-5sbrw.sample --cluster "outbound|5000||helloworld.sample.svc.cluster.local"
ENDPOINT STATUS OUTLIER CHECK CLUSTER
10.42.0.59:5000 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local
192.168.111.131:15443 HEALTHY OK outbound|5000||helloworld.sample.svc.cluster.local

The detailed configuration of this cluster follows; it names the TLS certificate and the root certificate used by this proxy:

{

"cluster": {

"@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",

"name": "outbound|5000||helloworld.sample.svc.cluster.local",

"type": "EDS",

"service_name": "outbound|5000||helloworld.sample.svc.cluster.local"

},

"transport_socket_matches": [

{

"name": "tlsMode-istio",

"match": {"tlsMode": "istio"},

"transport_socket": {

"name": "envoy.transport_sockets.tls",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext",

"common_tls_context": {

"tls_params": {

"tls_minimum_protocol_version": "TLSv1_2",

"tls_maximum_protocol_version": "TLSv1_3"

},

"alpn_protocols": [

"istio-peer-exchange",

"istio"

],

"tls_certificate_sds_secret_configs": [

{

"name": "default", // name of the local (workload) certificate

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc" // SDS server that serves the certificate: pilot-agent, which obtains it from istiod

}

}

],

},

}

}

],

"combined_validation_context": {

"default_validation_context": {},

"validation_context_sds_secret_config": {

"name": "ROOTCA", // name of the root certificate

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc" // SDS server that serves the root certificate: pilot-agent, which obtains it from istiod

}

}

],

},

}

}

}

},

"sni": "outbound_.5000_._.helloworld.sample.svc.cluster.local"

}

}

}

]

}

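Fetch the certificate and the root certificate of the sleep pod in cluster1. The secret named default is a certificate chain of four certificates (including the intermediate certificate of cluster1); ROOTCA is the root certificate: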

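# Fetch the certificate chain
istioctl proxy-config secret sleep-9454cc476-5sbrw.sample -o json | jq -r \
  '.dynamicActiveSecrets[0].secret.tlsCertificate.certificateChain.inlineBytes' | base64 --decode > chain.pem

# The chain holds four certificates: the first is this pod's certificate; the second is this cluster's
# certificate, i.e. the manually generated intermediate certificate certs/cluster1/ca-cert.pem; the third
# and fourth are the root certificate, i.e. the manually generated certs/root-cert.pem
# (why is the root certificate recorded twice here??)
# openssl x509 prints only the first certificate in a file, so print the whole chain through pkcs7
openssl crl2pkcs7 -nocrl -certfile chain.pem | openssl pkcs7 -print_certs -text -noout

# Fetch the root certificate, i.e. the third and fourth certificates above
istioctl proxy-config secret sleep-9454cc476-5sbrw.sample -o json | jq -r \
  '.dynamicActiveSecrets[1].secret.validationContext.trustedCa.inlineBytes' | base64 --decode > root.pem

# Show the root certificate
openssl x509 -noout -text -in root.pem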

East-west gateway Envoy routing and certificate configuration

A cross-cluster request first reaches the eastwestgateway pod of cluster2. Inspect that pod's Envoy routing configuration:

# Find the cluster that traffic is forwarded to
istioctl pc listener istio-eastwestgateway-765d464695-9wnbp.istio-system --port 15443 -ojson

# Cluster details; the workload is helloworld in the sample namespace
istioctl pc cluster istio-eastwestgateway-765d464695-9wnbp.istio-system --fqdn "outbound_.5000_._.helloworld.sample.svc.cluster.local" -ojson

The listener configuration follows. Based on the server name (SNI), traffic is forwarded automatically to the cluster named "outbound_.5000_._.helloworld.sample.svc.cluster.local", and the protocol is TLS:

{

"filter_chain_match": {

"application_protocols": [

"istio",

"istio-peer-exchange",

"istio-http/1.0",

"istio-http/1.1",

"istio-h2"

],

"server_names": [

"outbound_.5000_._.helloworld.sample.svc.cluster.local"

]

},

"filters": [

{

"name": "envoy.filters.network.tcp_proxy",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",

"stat_prefix": "outbound_.5000_._.helloworld.sample.svc.cluster.local",

"cluster": "outbound_.5000_._.helloworld.sample.svc.cluster.local",

}

}

]

},

Likewise, fetch the certificate configuration of the cluster2 eastwestgateway proxy:

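# Fetch the certificate chain
istioctl proxy-config secret istio-eastwestgateway-765d464695-9wnbp.istio-system -o json | jq -r \
  '.dynamicActiveSecrets[0].secret.tlsCertificate.certificateChain.inlineBytes' | base64 --decode > chain.pem

# The chain holds four certificates: the first is this pod's certificate; the second is this cluster's
# certificate, i.e. the manually generated intermediate certificate certs/cluster2/ca-cert.pem; the third
# and fourth are the root certificate, i.e. the manually generated certs/root-cert.pem
# openssl x509 prints only the first certificate in a file, so print the whole chain through pkcs7
openssl crl2pkcs7 -nocrl -certfile chain.pem | openssl pkcs7 -print_certs -text -noout

# Fetch the root certificate, i.e. the third and fourth certificates above
istioctl proxy-config secret istio-eastwestgateway-765d464695-9wnbp.istio-system -o json | jq -r \
  '.dynamicActiveSecrets[1].secret.validationContext.trustedCa.inlineBytes' | base64 --decode > root.pem

# Show the root certificate
openssl x509 -noout -text -in root.pem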

Server-side Envoy routing and certificate configuration

Inspect the Envoy configuration of the helloworld pod in cluster2:

# The listener config yields "name": "inbound|5000||". Port 5000 has two filter chains,
# one with transport protocol tls and one with raw_buffer; both forward to "inbound|5000||"
istioctl pc listener helloworld-v2-79d5467d55-whmmb.sample --port 15006 -ojson

# Fetch the cluster config
istioctl pc cluster helloworld-v2-79d5467d55-whmmb.sample --fqdn "inbound|5000||" -ojson

The detailed listener configuration:

{

"filter_chain_match": {

"destination_port": 5000,

"transport_protocol": "tls",

},

"filters": [

{

"name": "envoy.filters.network.http_connection_manager",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager",

"route_config": {

"name": "inbound|5000||",

},

"server_name": "istio-envoy",

}

}

],

"transport_socket": {

"name": "envoy.transport_sockets.tls",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext",

"common_tls_context": {

"tls_params": {

"tls_minimum_protocol_version": "TLSv1_2",

"tls_maximum_protocol_version": "TLSv1_3",

},

"alpn_protocols": [

"h2",

"http/1.1"

],

"tls_certificate_sds_secret_configs": [

{

"name": "default", // name of the server certificate

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc" // SDS server that serves the certificate: pilot-agent, which obtains it from istiod

}

}

],

},

}

}

],

"combined_validation_context": {

"validation_context_sds_secret_config": {

"name": "ROOTCA", // name of the root certificate

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc" // SDS server that serves the root certificate: pilot-agent, which obtains it from istiod

}

}

],

},

}

}

}

},

"require_client_certificate": true

}

},

},

Fetch the certificates of the helloworld pod in cluster2:

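# Fetch the certificate chain
istioctl proxy-config secret helloworld-v2-79d5467d55-whmmb.sample -o json | jq -r \
  '.dynamicActiveSecrets[0].secret.tlsCertificate.certificateChain.inlineBytes' | base64 --decode > chain.pem

# The chain holds four certificates: the first is this pod's certificate; the second is this cluster's
# certificate, i.e. the manually generated intermediate certificate certs/cluster2/ca-cert.pem; the third
# and fourth are the root certificate, i.e. the manually generated certs/root-cert.pem
# (why is the root certificate recorded twice here??)
# openssl x509 prints only the first certificate in a file, so print the whole chain through pkcs7
openssl crl2pkcs7 -nocrl -certfile chain.pem | openssl pkcs7 -print_certs -text -noout

# Fetch the root certificate; it is the same as the third and fourth certificates above
istioctl proxy-config secret helloworld-v2-79d5467d55-whmmb.sample -o json | jq -r \
  '.dynamicActiveSecrets[1].secret.validationContext.trustedCa.inlineBytes' | base64 --decode > root.pem

# Show the root certificate
openssl x509 -noout -text -in root.pem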

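Source code analysis

From the cluster1 istiod logs, take the entries written while the local cluster initializes and the entries written when istiod connects to the remote cluster after its secret is created, and use them to trace which code istiod executes.

Logs for the local cluster

The entries for the local cluster in the log: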

2122 2023-09-21T10:38:02.154035Z info Starting Istiod Server with primary cluster caa

2123 2023-09-21T10:38:02.154146Z info initializing Kubernetes credential reader for cluster caa

2124 2023-09-21T10:38:02.154124Z info ControlZ available at 127.0.0.1:9876

2125 2023-09-21T10:38:02.154198Z info kube Initializing Kubernetes service registry "caa"

2126 2023-09-21T10:38:02.154277Z info kube should join leader-election for cluster caa: false

2127 2023-09-21T10:38:02.154376Z info Starting multicluster remote secrets controller

2128 2023-09-21T10:38:02.154582Z info status Starting status manager

2129 2023-09-21T10:38:02.154633Z info Starting validation controller

2130 2023-09-21T10:38:02.154654Z info Starting ADS server

2131 2023-09-21T10:38:02.154666Z info Starting IstioD CA

2132 2023-09-21T10:38:02.154672Z info JWT policy is third-party-jwt

2133 2023-09-21T10:38:02.154670Z debug started queue 2npvxgc495

The functions involved are:

- pilot/pkg/bootstrap/server.go: NewServer

- pilot/pkg/server/instance.go: New

- pilot/pkg/bootstrap/server.go: NewServer

- pilot/pkg/bootstrap/server.go: Server.Start

- pkg/kube/multicluster/secretcontroller.go: Controller.handleAdd

- Controller 1: pilot/pkg/credentials/kube/multicluster.go: Multicluster.ClusterAdded

- Controller 2: pilot/pkg/serviceregistry/kube/controller/multicluster.go: Multicluster.ClusterAdded

Logs for connecting to the remote cluster

After the peer cluster's secret is created, the log shows:

2023-09-20T10:32:01.576782Z info processing secret event for secret istio-system/istio-remote-secret-cluster2

2023-09-20T10:32:01.576819Z debug secret istio-system/istio-remote-secret-cluster2 exists in informer cache, processing it

2023-09-20T10:32:01.576830Z info Adding cluster cluster=cluster2 secret=istio-system/istio-remote-secret-cluster2

2023-09-20T10:32:01.595271Z info initializing Kubernetes credential reader for cluster cluster2

2023-09-20T10:32:01.601914Z info kube Initializing Kubernetes service registry "cluster2"

2023-09-20T10:32:01.602043Z info kube Creating WorkloadEntry only config store for cluster2

2023-09-20T10:32:01.661006Z info kube should join leader-election for cluster cluster2: false

2023-09-20T10:32:01.661036Z info finished callback for cluster and starting to sync cluster=cluster2 secret=istio-system/istio-remote-secret-cluster2

2023-09-20T10:32:01.661044Z info Number of remote clusters: 1

2023-09-20T10:32:01.661026Z debug started queue 8tvl4q7vq2

2023-09-20T10:32:01.661045Z info kube Starting Pilot K8S CRD controller controller=mc-workload-entry-controller

2023-09-20T10:32:01.661090Z info kube controller "networking.istio.io/v1alpha3/WorkloadEntry" is syncing... controller=mc-workload-entry-controller

2023-09-20T10:32:01.663156Z info kube controller "networking.istio.io/v1alpha3/WorkloadEntry" is syncing... controller=mc-workload-entry-controller

2023-09-20T10:32:01.667403Z info kube Pilot K8S CRD controller synced in 6.359402ms controller=mc-workload-entry-controller

2023-09-20T10:32:01.667423Z debug started queue xkkswrrc2d

2023-09-20T10:32:01.692274Z info kube kube controller for cluster2 synced after 31.195466ms

2023-09-20T10:32:01.692315Z debug started queue cluster2

2023-09-20T10:32:01.692740Z info model reloading network gateways

2023-09-20T10:32:01.692759Z info model gateways changed, triggering push

The functions involved are listed below; the logic is very similar:

- pkg/kube/multicluster/secretcontroller.go: Controller.processItem

- pkg/kube/multicluster/secretcontroller.go: Controller.addSecret

- pkg/kube/multicluster/secretcontroller.go: Controller.handleAdd

- Controller 1: pilot/pkg/credentials/kube/multicluster.go: Multicluster.ClusterAdded

- Controller 2: pilot/pkg/serviceregistry/kube/controller/multicluster.go: Multicluster.ClusterAdded

Controller.handleAdd in secretcontroller.go calls ClusterAdded on every registered handler. The handlers are registered through Controller.AddHandler, which is called in two places:

- pilot/pkg/bootstrap/server.go: Server.initSDSServer, which registers the Multicluster handler from credentials/kube/multicluster.go

- pilot/pkg/bootstrap/servicecontroller.go: Server.initKubeRegistry, which registers the Multicluster handler from serviceregistry/kube/controller/multicluster.go
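
The registration pattern described above can be sketched in plain Go. This is a simplified illustration rather than Istio's code: Cluster, ClusterHandler, Controller and recorder are reduced stand-ins, and errors are combined with the standard library's errors.Join (Go 1.20+) instead of the multierror package that the quoted Istio source uses.

```go
package main

import (
	"errors"
	"fmt"
)

// Cluster is a reduced stand-in for the cluster object passed to handlers.
type Cluster struct{ ID string }

// ClusterHandler is notified when a cluster is added.
type ClusterHandler interface {
	ClusterAdded(c *Cluster, stop <-chan struct{}) error
}

// Controller keeps the handlers registered through AddHandler.
type Controller struct{ handlers []ClusterHandler }

func (c *Controller) AddHandler(h ClusterHandler) { c.handlers = append(c.handlers, h) }

// handleAdd calls every handler and collects the errors instead of stopping at the first one.
func (c *Controller) handleAdd(cl *Cluster, stop <-chan struct{}) error {
	var errs []error
	for _, h := range c.handlers {
		errs = append(errs, h.ClusterAdded(cl, stop))
	}
	return errors.Join(errs...) // nil when every handler returned nil
}

// recorder is a hypothetical handler that records the cluster IDs it has seen.
type recorder struct {
	name string
	seen []string
	fail bool
}

func (r *recorder) ClusterAdded(c *Cluster, _ <-chan struct{}) error {
	r.seen = append(r.seen, c.ID)
	if r.fail {
		return fmt.Errorf("%s: cannot add cluster %s", r.name, c.ID)
	}
	return nil
}

func main() {
	creds := &recorder{name: "credentials"}
	registry := &recorder{name: "serviceregistry"}
	ctl := &Controller{}
	ctl.AddHandler(creds)
	ctl.AddHandler(registry)

	stop := make(chan struct{})
	defer close(stop)
	// The local cluster and a remote cluster go through the same code path.
	fmt.Println(ctl.handleAdd(&Cluster{ID: "cluster1"}, stop))
	fmt.Println(ctl.handleAdd(&Cluster{ID: "cluster2"}, stop))
	fmt.Println(creds.seen, registry.seen)
}
```

The point of the pattern is that the secret controller does not need to know what each subsystem does with a new cluster; it only fans the event out.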

Local cluster initialization

The Controller in secretcontroller.go is initialized when the server starts:

// pilot/pkg/bootstrap/server.go

// Start for the local cluster

func (s *Server) Start(stop <-chan struct{}) error {

log.Infof("Starting Istiod Server with primary cluster %s", s.clusterID)

// ...

}

// Creating the server initializes the individual controllers

func NewServer(args *PilotArgs, initFuncs ...func(*Server)) (*Server, error) {

// ...

if err := s.initControllers(args); err != nil {

return nil, err

}

// ...

}

// Initialize the multicluster controller and the SDS controller

func (s *Server) initControllers(args *PilotArgs) error {

log.Info("initializing controllers")

s.initMulticluster(args)

s.initSDSServer()

// ...

}

func (s *Server) initMulticluster(args *PilotArgs) {

if s.kubeClient == nil {

return

}

// Initialize the Controller from secretcontroller.go

s.multiclusterController = multicluster.NewController(s.kubeClient, args.Namespace, s.clusterID, s.environment.Watcher)

s.XDSServer.ListRemoteClusters = s.multiclusterController.ListRemoteClusters

s.addStartFunc(func(stop <-chan struct{}) error {

return s.multiclusterController.Run(stop)

})

}

The multicluster controller in secretcontroller.go does most of its work in Run, which uses an informer to list the relevant resources and watch them for changes:

// pkg/kube/multicluster/secretcontroller.go

func NewController(kubeclientset kube.Client, namespace string, clusterID cluster.ID, meshWatcher mesh.Watcher) *Controller {

informerClient := kubeclientset

// Create an informer that lists and watches, in the mesh's namespace, the secrets labeled istio/multiCluster: "true"

secretsInformer := cache.NewSharedIndexInformer(

&cache.ListWatch{

ListFunc: func(opts metav1.ListOptions) (runtime.Object, error) {

opts.LabelSelector = MultiClusterSecretLabel + "=true"

return informerClient.Kube().CoreV1().Secrets(namespace).List(context.TODO(), opts)

},

WatchFunc: func(opts metav1.ListOptions) (watch.Interface, error) {

opts.LabelSelector = MultiClusterSecretLabel + "=true"

return informerClient.Kube().CoreV1().Secrets(namespace).Watch(context.TODO(), opts)

},

},

&corev1.Secret{}, 0, cache.Indexers{},

)

controller := &Controller{

namespace: namespace,

configClusterID: clusterID,

configClusterClient: kubeclientset,

cs: newClustersStore(),

informer: secretsInformer,

}

namespaces := kclient.New[*corev1.Namespace](kubeclientset)

controller.DiscoveryNamespacesFilter = filter.NewDiscoveryNamespacesFilter(namespaces, meshWatcher.Mesh().GetDiscoverySelectors())

controller.queue = controllers.NewQueue("multicluster secret",

controllers.WithMaxAttempts(maxRetries),

controllers.WithReconciler(controller.processItem))

// Register the event handler: when a secret changes, queue.AddObject puts it on the queue

// queue.Run then calls queue.processItem to take it off the queue and invokes controller.processItem

_, _ = secretsInformer.AddEventHandler(controllers.ObjectHandler(controller.queue.AddObject))

return controller

}

func (c *Controller) Run(stopCh <-chan struct{}) error {

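// Run the handlers for the config cluster. For the local cluster, handleAdd runs outside the goroutine, so it blocks the other Run/startFuncs until this registration completes

// The local cluster is the primary cluster, so its kube client is already configured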

configCluster := &Cluster{Client: c.configClusterClient, ID: c.configClusterID}

if err := c.handleAdd(configCluster, stopCh); err != nil {

return fmt.Errorf("failed initializing primary cluster %s: %v", c.configClusterID, err)

}

go func() { // start the multicluster remote secrets controller

t0 := time.Now()

log.Info("Starting multicluster remote secrets controller")

go c.informer.Run(stopCh)

if !kube.WaitForCacheSync(stopCh, c.informer.HasSynced) {

log.Error("Failed to sync multicluster remote secrets controller cache")

return

}

log.Infof("multicluster remote secrets controller cache synced in %v", time.Since(t0))

c.queue.Run(stopCh)

}()

return nil

}

// Iterate over the handlers and call ClusterAdded on each

func (c *Controller) handleAdd(cluster *Cluster, stop <-chan struct{}) error {

var errs *multierror.Error

for _, handler := range c.handlers {

errs = multierror.Append(errs, handler.ClusterAdded(cluster, stop))

}

return errs.ErrorOrNil()

}

A CredentialsController is initialized to handle the credential-related logic once a cluster has been added:

// pilot/pkg/credentials/kube/multicluster.go

func (m *Multicluster) ClusterAdded(cluster *multicluster.Cluster, _ <-chan struct{}) {

log.Infof("initializing Kubernetes credential reader for cluster %v", cluster.ID)

sc := NewCredentialsController(cluster.Client)

m.m.Lock()

defer m.m.Unlock()

m.addCluster(cluster, sc)

}

The cluster is added by calling ClusterAdded on the multicluster controller under the serviceregistry directory:

// pilot/pkg/serviceregistry/kube/controller/multicluster.go

func (m *Multicluster) ClusterAdded(cluster *multicluster.Cluster, clusterStopCh <-chan struct{}) error {

m.m.Lock()

kubeController, kubeRegistry, options, configCluster, err := m.addCluster(cluster)

if err != nil {

m.m.Unlock()

return err

}

m.m.Unlock()

// clusterStopCh is a channel that will be closed when this cluster removed.

return m.initializeCluster(cluster, kubeController, kubeRegistry, *options, configCluster, clusterStopCh)

}

// Register the various event handlers for the cluster

func (m *Multicluster) initializeCluster(cluster *multicluster.Cluster, kubeController *kubeController, kubeRegistry *Controller,

options Options, configCluster bool, clusterStopCh <-chan struct{},

) error {

client := cluster.Client

if m.serviceEntryController != nil && features.EnableServiceEntrySelectPods {

// Add an instance handler in the kubernetes registry to notify service entry store about pod events

kubeRegistry.AppendWorkloadHandler(m.serviceEntryController.WorkloadInstanceHandler)

}

if m.configController != nil && features.EnableAmbientControllers {

m.configController.RegisterEventHandler(gvk.AuthorizationPolicy, kubeRegistry.AuthorizationPolicyHandler)

}

if configCluster && m.serviceEntryController != nil && features.EnableEnhancedResourceScoping {

kubeRegistry.AppendNamespaceDiscoveryHandlers(m.serviceEntryController.NamespaceDiscoveryHandler)

}

//...

// run after WorkloadHandler is added

m.opts.MeshServiceController.AddRegistryAndRun(kubeRegistry, clusterStopCh)

shouldLead := m.checkShouldLead(client, options.SystemNamespace)

log.Infof("should join leader-election for cluster %s: %t", cluster.ID, shouldLead)

//...

return nil

}

Remote cluster initialization

Execution first enters processItem in secretcontroller.go, which calls addSecret, which in turn calls createRemoteCluster to create the remote cluster:

// pkg/kube/multicluster/secretcontroller.go

func (c *Controller) processItem(key types.NamespacedName) error {

log.Infof("processing secret event for secret %s", key)

obj, exists, err := c.informer.GetIndexer().GetByKey(key.String())

if err != nil {

return fmt.Errorf("error fetching object %s: %v", key, err)

}

if exists {

log.Debugf("secret %s exists in informer cache, processing it", key)

if err := c.addSecret(key, obj.(*corev1.Secret)); err != nil {

return fmt.Errorf("error adding secret %s: %v", key, err)

}

} else {

log.Debugf("secret %s does not exist in informer cache, deleting it", key)

c.deleteSecret(key.String())

}

remoteClusters.Record(float64(c.cs.Len()))

return nil

}

func (c *Controller) addSecret(name types.NamespacedName, s *corev1.Secret) error {

secretKey := name.String()

// Delete clusters that this secret registered earlier but no longer contains

existingClusters := c.cs.GetExistingClustersFor(secretKey)

for _, existingCluster := range existingClusters {

if _, ok := s.Data[string(existingCluster.ID)]; !ok {

c.deleteCluster(secretKey, existingCluster)

}

}

var errs *multierror.Error

for clusterID, kubeConfig := range s.Data {

logger := log.WithLabels("cluster", clusterID, "secret", secretKey)

// The cluster ID must differ from that of the local (config) cluster

if cluster.ID(clusterID) == c.configClusterID {

logger.Infof("ignoring cluster as it would overwrite the config cluster")

continue

}

action, callback := "Adding", c.handleAdd

// This secret already registered the cluster: check whether the kubeconfig changed

if prev := c.cs.Get(secretKey, cluster.ID(clusterID)); prev != nil {

action, callback = "Updating", c.handleUpdate

// clusterID must be unique even across multiple secrets

kubeConfigSha := sha256.Sum256(kubeConfig)

if bytes.Equal(kubeConfigSha[:], prev.kubeConfigSha[:]) {

logger.Infof("skipping update (kubeconfig are identical)")

continue

}

// It changed, so stop the previous cluster

prev.Stop()

} else if c.cs.Contains(cluster.ID(clusterID)) { // cluster IDs must be globally unique; duplicates are ignored

logger.Warnf("cluster has already been registered")

continue

}

logger.Infof("%s cluster", action)

remoteCluster, err := c.createRemoteCluster(kubeConfig, clusterID)

if err != nil {

logger.Errorf("%s cluster: create remote cluster failed: %v", action, err)

errs = multierror.Append(errs, err)

continue

}

// Call handleAdd or handleUpdate to register the cluster

callback(remoteCluster, remoteCluster.stop)

logger.Infof("finished callback for cluster and starting to sync")

c.cs.Store(secretKey, remoteCluster.ID, remoteCluster)

go remoteCluster.Run()

}

log.Infof("Number of remote clusters: %d", c.cs.Len())

return errs.ErrorOrNil()

}

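Once execution reaches handleAdd, the remaining logic is shared with the local cluster: the cluster is watched for changes and the corresponding handlers are invoked.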
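
The add/update/skip decision made in addSecret can be isolated into a small Go sketch. The store type and its decide method are hypothetical simplifications: they keep only one kubeconfig hash per cluster ID and leave out the per-secret bookkeeping and the cluster lifecycle (stop, run) that the real controller handles.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// store remembers the SHA-256 of the kubeconfig last seen for each cluster ID.
type store map[string][sha256.Size]byte

// decide reports what to do with a kubeconfig found in a remote secret and
// records its hash whenever the cluster has to be created or recreated.
func (s store) decide(localID, clusterID string, kubeConfig []byte) string {
	if clusterID == localID {
		return "ignore" // would overwrite the local (config) cluster
	}
	sum := sha256.Sum256(kubeConfig)
	prev, known := s[clusterID]
	if known && prev == sum {
		return "skip" // identical kubeconfig, nothing to do
	}
	s[clusterID] = sum
	if known {
		return "update"
	}
	return "add"
}

func main() {
	s := store{}
	fmt.Println(s.decide("cluster1", "cluster2", []byte("kubeconfig-v1")))
	fmt.Println(s.decide("cluster1", "cluster2", []byte("kubeconfig-v1")))
	fmt.Println(s.decide("cluster1", "cluster2", []byte("kubeconfig-v2")))
	fmt.Println(s.decide("cluster1", "cluster1", []byte("kubeconfig-v1")))
}
```

Comparing hashes rather than raw bytes keeps the stored state small while still detecting any change to the kubeconfig.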

How the east-west gateway is discovered

The Istio installation configured two ingress gateways, one named "istio-eastwestgateway" and one named "istio-ingressgateway". How does the code tell which of them is the east-west gateway?

The analysis starts from the source code that collects the gateway configuration.

NotifyGatewayHandlers is called from the following places:

- pilot/pkg/model/network.go:refreshAndNotify, when the DNS resolution of a gateway changes

- pilot/pkg/serviceregistry/aggregate/controller.go:addRegistry, when a registry is added

- pilot/pkg/serviceregistry/kube/controller/controller.go:deleteService, when the deleted service is a gateway

- pilot/pkg/serviceregistry/kube/controller/network.go:extractGatewaysFromService, when the service has been updated

- pilot/pkg/serviceregistry/kube/controller/network.go:reloadNetworkGateways, which iterates over all services, when at least one gateway has changed

- pilot/pkg/serviceregistry/memory/discovery.go:AddGateways, when gateways are added

// pilot/pkg/model/network.go

// Initialization: merges the gateways obtained from MeshNetworks and from the service registries

func NewNetworkManager(env *Environment, xdsUpdater XDSUpdater) (*NetworkManager, error) {

nameCache, err := newNetworkGatewayNameCache()

if err != nil {

return nil, err

}

mgr := &NetworkManager{env: env, NameCache: nameCache, xdsUpdater: xdsUpdater}

// i.e. env.NetworksWatcher.AddNetworksHandler: called when meshNetworks in the mesh configuration changes

env.AddNetworksHandler(mgr.reloadGateways)

// Register a handler: NotifyGatewayHandlers will call mgr.reloadGateways

env.AppendNetworkGatewayHandler(mgr.reloadGateways)

// Register a handler: NotifyGatewayHandlers will call mgr.reloadGateways

nameCache.AppendNetworkGatewayHandler(mgr.reloadGateways)

mgr.reload()

return mgr, nil

}

// Called from NotifyGatewayHandlers

func (mgr *NetworkManager) reloadGateways() {

mgr.mu.Lock()

oldGateways := make(NetworkGatewaySet)

for _, gateway := range mgr.allGateways() {

oldGateways.Add(gateway)

}

changed := !mgr.reload().Equals(oldGateways)

mgr.mu.Unlock()

if changed && mgr.xdsUpdater != nil {

log.Infof("gateways changed, triggering push")

mgr.xdsUpdater.ConfigUpdate(&PushRequest{Full: true, Reason: []TriggerReason{NetworksTrigger}})

}

}

// Reload the network gateways from MeshNetworks and the service registries

func (mgr *NetworkManager) reload() NetworkGatewaySet {

log.Infof("reloading network gateways")

gatewaySet := make(NetworkGatewaySet)

// NetworksWatcher is initialized by the code "multiWatcher := kubemesh.NewConfigMapWatcher(s.kubeClient, args.Namespace, configMapName, configMapKey, multiWatch, s.internalStop)"

// First, read the static mesh network configuration from the global "meshNetworks" field; it is not configured here, so it is "{}"

meshNetworks := mgr.env.NetworksWatcher.Networks()

if meshNetworks != nil {

for nw, networkConf := range meshNetworks.Networks {

for _, gw := range networkConf.Gateways {

if gw.GetAddress() == "" {

continue

}

gatewaySet[NetworkGateway{

Cluster: "", /* TODO(nmittler): Add Cluster to the API */

Network: network.ID(nw),

Addr: gw.GetAddress(),

Port: gw.Port,

}] = struct{}{}

}

}

}

// Second, collect the cross-network gateways from the registries by calling ServiceDiscovery.NetworkGateways

for _, gw := range mgr.env.NetworkGateways() {

gatewaySet[gw] = struct{}{}

}

mgr.multiNetworkEnabled = len(gatewaySet) > 0

// Resolve gateway hostnames

mgr.resolveHostnameGateways(gatewaySet)

// Store the result in two maps, keyed by network and by network+cluster

byNetwork := make(map[network.ID][]NetworkGateway)

byNetworkAndCluster := make(map[networkAndCluster][]NetworkGateway)

for gw := range gatewaySet {

byNetwork[gw.Network] = append(byNetwork[gw.Network], gw)

nc := networkAndClusterForGateway(&gw)

byNetworkAndCluster[nc] = append(byNetworkAndCluster[nc], gw)

}

//...

mgr.lcm = uint32(lcmVal)

mgr.byNetwork = byNetwork

mgr.byNetworkAndCluster = byNetworkAndCluster

return gatewaySet

}

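
The bookkeeping at the end of reload (deduplicating gateways in a set, then indexing them by network and by network plus cluster) can be sketched as follows. The types are simplified stand-ins with string fields, the function name index is hypothetical, and the sample values reuse the two gateway addresses and the network and cluster names of this deployment.

```go
package main

import "fmt"

// NetworkGateway is a reduced stand-in for model.NetworkGateway.
// All fields are comparable, so the struct can be used as a map key.
type NetworkGateway struct {
	Network string
	Cluster string
	Addr    string
	Port    uint32
}

type networkAndCluster struct{ network, cluster string }

// index deduplicates the gateways and groups them by network and by network+cluster.
func index(gws []NetworkGateway) (map[string][]NetworkGateway, map[networkAndCluster][]NetworkGateway) {
	set := make(map[NetworkGateway]struct{})
	for _, gw := range gws {
		set[gw] = struct{}{}
	}
	byNetwork := make(map[string][]NetworkGateway)
	byNetworkAndCluster := make(map[networkAndCluster][]NetworkGateway)
	for gw := range set {
		byNetwork[gw.Network] = append(byNetwork[gw.Network], gw)
		nc := networkAndCluster{network: gw.Network, cluster: gw.Cluster}
		byNetworkAndCluster[nc] = append(byNetworkAndCluster[nc], gw)
	}
	return byNetwork, byNetworkAndCluster
}

func main() {
	gws := []NetworkGateway{
		{Network: "network1", Cluster: "cluster1", Addr: "192.168.111.205", Port: 15443},
		{Network: "network2", Cluster: "cluster2", Addr: "192.168.111.131", Port: 15443},
		{Network: "network2", Cluster: "cluster2", Addr: "192.168.111.131", Port: 15443}, // duplicate entry
	}
	byNetwork, byNC := index(gws)
	fmt.Println(len(byNetwork["network1"]), len(byNetwork["network2"]), len(byNC))
}
```

Using the struct itself as the set key is what makes the "did anything change" comparison in reloadGateways a plain set comparison.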

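The static mesh network configuration field "meshNetworks" (see the MeshNetworks reference) describes the set of networks inside the mesh and how to route to the endpoints in each network. Example configuration: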

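MeshNetworks:
  networks:
    network1: # network name
      endpoints: # endpoints in this network
        # Add all endpoints of the given registry to this network. The registry name should match the
        # kubeconfig file name inside the secret used to configure the registry (Kubernetes multicluster)
        # or provided by the MCP server. (Is the multicluster secret the one created by the
        # "istioctl create-remote-secret" command???)
        - fromRegistry: registry1
        - fromCidr: 192.168.100.0/22 # endpoints come from this CIDR range
      gateways: # gateways
        - registryServiceName: istio-ingressgateway.istio-system.svc.cluster.local # fully qualified domain name
          port: 15443
          locality: us-east-1a # location of the gateway
        - address: 192.168.100.1 # IP address or an externally resolvable DNS name
          port: 15443
          locality: us-east-1a # location of the gateway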

|

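NetworkEndpoints describes how the network associated with an endpoint should be inferred. An endpoint is assigned to a network according to the following rules:

- Implicitly: the registry explicitly provides information about the network the endpoint belongs to. In some cases, the network associated with an endpoint can be indicated by adding the ISTIO_META_NETWORK environment variable to the sidecar.

- Explicitly: by matching the service registry name against one of the "fromRegistry" entries in the mesh config (a "from_registry" can only be assigned to a single network), or by matching the IP address against one of the CIDR ranges of a network in the mesh config. CIDR ranges must not overlap, and each must be assigned to a single network. If both rules apply, the explicit rule overrides the implicit one.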
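The precedence between the two rules can be sketched as follows, using only the CIDR part of the explicit rule. This is a minimal illustration, not Istio's implementation: resolveNetwork and the cidrs map layout (CIDR string to network name) are hypothetical, and the addresses are example values.

```go
package main

import (
	"fmt"
	"net/netip"
)

// resolveNetwork (hypothetical helper) returns the network of an endpoint.
// An explicit CIDR match takes precedence over the implicit network, which
// stands for what the registry or ISTIO_META_NETWORK reported.
func resolveNetwork(implicit, ip string, cidrs map[string]string) (string, error) {
	addr, err := netip.ParseAddr(ip)
	if err != nil {
		return "", err
	}
	// CIDR ranges must not overlap, so at most one entry can match and the
	// map iteration order does not matter.
	for cidr, nw := range cidrs {
		prefix, err := netip.ParsePrefix(cidr)
		if err != nil {
			return "", err
		}
		if prefix.Contains(addr) {
			return nw, nil
		}
	}
	return implicit, nil
}

func main() {
	cidrs := map[string]string{"192.168.100.0/22": "network1"}

	nw, err := resolveNetwork("network2", "192.168.101.7", cidrs)
	if err != nil {
		panic(err)
	}
	fmt.Println(nw) // explicit match wins: network1

	nw, err = resolveNetwork("network2", "10.0.0.1", cidrs)
	if err != nil {
		panic(err)
	}
	fmt.Println(nw) // no CIDR match, implicit value is kept: network2
}
```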

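The official Istio multicluster installation guide does not set the static mesh network configuration field, so it is empty here. According to the article "istio 多集群部署情况下 MeshNetworks 配置探究", MeshNetworks is a legacy configuration that is only needed in a few advanced scenarios.

The code above obtains cross-network gateways from env.NetworkGateways(), which calls NetworkGateways on each registry in turn. The registry of the local cluster is added through AddRegistry, and the registries of remote clusters are added through AddRegistryAndRun: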

|

// pilot/pkg/serviceregistry/aggregate/controller.go
// NetworkGateways merges the service-based cross-network gateways from each registry.
func (c *Controller) NetworkGateways() []model.NetworkGateway {
	var gws []model.NetworkGateway
	for _, r := range c.GetRegistries() {
		gws = append(gws, r.NetworkGateways()...)
	}
	return gws
}

// GetRegistries returns a copy of all registries.
func (c *Controller) GetRegistries() []serviceregistry.Instance {
	c.storeLock.RLock()
	defer c.storeLock.RUnlock()
	out := make([]serviceregistry.Instance, len(c.registries))
	for i := range c.registries {
		out[i] = c.registries[i]
	}
	return out
}

// addRegistry adds a registry and sets up the handlers for its events.
func (c *Controller) addRegistry(registry serviceregistry.Instance, stop <-chan struct{}) {
	c.registries = append(c.registries, &registryEntry{Instance: registry, stop: stop})
	// c.NotifyGatewayHandlers
	registry.AppendNetworkGatewayHandler(c.NotifyGatewayHandlers)
	registry.AppendServiceHandler(c.handlers.NotifyServiceHandlers)
	registry.AppendServiceHandler(func(prev, curr *model.Service, event model.Event) {
		for _, handlers := range c.getClusterHandlers() {
			handlers.NotifyServiceHandlers(prev, curr, event)
		}
	})
}

// AddRegistry adds a registry for the local cluster. It is called from initServiceController
// in pilot/pkg/bootstrap/servicecontroller.go.
func (c *Controller) AddRegistry(registry serviceregistry.Instance) {
	c.storeLock.Lock()
	defer c.storeLock.Unlock()
	c.addRegistry(registry, nil)
}

// AddRegistryAndRun adds a registry for a remote cluster. It is called from Multicluster.initializeCluster
// in pilot/pkg/serviceregistry/kube/controller/multicluster.go,
// and Multicluster.initializeCluster is called from Multicluster.ClusterAdded in the same file.
func (c *Controller) AddRegistryAndRun(registry serviceregistry.Instance, stop <-chan struct{}) {
	if stop == nil {
		log.Warnf("nil stop channel passed to AddRegistryAndRun for registry %s/%s", registry.Provider(), registry.Cluster())
	}
	c.storeLock.Lock()
	defer c.storeLock.Unlock()
	c.addRegistry(registry, stop)
	if c.running {
		go registry.Run(stop)
	}
}

|
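The aggregation pattern used by the controller above can be reduced to a small standalone sketch. All names here (Registry, staticRegistry, Aggregate) are hypothetical simplifications and not Istio types. The sketch copies the registry slice under a read lock and then merges the gateways outside the lock, which is the same shape as GetRegistries followed by NetworkGateways.

```go
package main

import (
	"fmt"
	"sync"
)

// NetworkGateway is a simplified stand-in for model.NetworkGateway.
type NetworkGateway struct {
	Network string
	Addr    string
	Port    uint32
}

// Registry is a hypothetical, minimal version of a service registry.
type Registry interface {
	NetworkGateways() []NetworkGateway
}

// staticRegistry returns a fixed list of gateways.
type staticRegistry []NetworkGateway

func (r staticRegistry) NetworkGateways() []NetworkGateway { return r }

// Aggregate holds several registries behind a read-write lock.
type Aggregate struct {
	mu         sync.RWMutex
	registries []Registry
}

// AddRegistry appends a registry under the write lock.
func (a *Aggregate) AddRegistry(r Registry) {
	a.mu.Lock()
	defer a.mu.Unlock()
	a.registries = append(a.registries, r)
}

// snapshot copies the registry slice so callers can iterate without the lock.
func (a *Aggregate) snapshot() []Registry {
	a.mu.RLock()
	defer a.mu.RUnlock()
	out := make([]Registry, len(a.registries))
	copy(out, a.registries)
	return out
}

// NetworkGateways concatenates the gateways reported by every registry.
func (a *Aggregate) NetworkGateways() []NetworkGateway {
	var gws []NetworkGateway
	for _, r := range a.snapshot() {
		gws = append(gws, r.NetworkGateways()...)
	}
	return gws
}

func main() {
	agg := &Aggregate{}
	agg.AddRegistry(staticRegistry{{Network: "network1", Addr: "10.0.0.1", Port: 15443}})
	agg.AddRegistry(staticRegistry{{Network: "network2", Addr: "10.0.0.2", Port: 15443}})
	fmt.Println(len(agg.NetworkGateways())) // prints 2
}
```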

The NetworkGateways logic of a registry is shown below. networkGatewaysBySvc is populated in extractGatewaysInner, which calls getGatewayDetails to decide, based on the "topology.istio.io/network" label, whether a service is an east-west gateway:

|

// pilot/pkg/serviceregistry/kube/controller/network.go
func (c *Controller) NetworkGateways() []model.NetworkGateway {
	c.networkManager.RLock()
	defer c.networkManager.RUnlock()
	if len(c.networkGatewaysBySvc) == 0 {
		return nil
	}
	// Merge all the gateways into a single set to eliminate duplicates.
	out := make(model.NetworkGatewaySet)
	for _, gateways := range c.networkGatewaysBySvc {
		out.AddAll(gateways)
	}
	return out.ToArray()
}

// extractGatewaysInner extracts the gateways from the given service and stores them in networkGatewaysBySvc.
func (n *networkManager) extractGatewaysInner(svc *model.Service) bool {
	n.Lock()
	defer n.Unlock()
	previousGateways := n.networkGatewaysBySvc[svc.Hostname]
	gateways := n.getGatewayDetails(svc)
	if len(previousGateways) == 0 && len(gateways) == 0 {
		return false
	}
	newGateways := make(model.NetworkGatewaySet)
	// check if we have node port mappings
	nodePortMap := make(map[uint32]uint32)
	if svc.Attributes.ClusterExternalPorts != nil {
		// Mapping from service port to nodePort for this cluster.
		if npm, exists := svc.Attributes.ClusterExternalPorts[n.clusterID]; exists {
			nodePortMap = npm
		}
	}
	for _, addr := range svc.Attributes.ClusterExternalAddresses.GetAddressesFor(n.clusterID) {
		for _, gw := range gateways {
			if nodePort, exists := nodePortMap[gw.Port]; exists {
				gw.Port = nodePort
			}
			gw.Cluster = n.clusterID
			gw.Addr = addr
			newGateways.Add(gw)
		}
	}
	gatewaysChanged := !newGateways.Equals(previousGateways)
	if len(newGateways) > 0 {
		n.networkGatewaysBySvc[svc.Hostname] = newGateways
	} else {
		delete(n.networkGatewaysBySvc, svc.Hostname)
	}
	return gatewaysChanged
}

// getGatewayDetails returns gateways without the address populated: only the network and the
// (unmapped) port for the given service.
func (n *networkManager) getGatewayDetails(svc *model.Service) []model.NetworkGateway {
	// The "topology.istio.io/network" label marks the service as a gateway.
	if nw := svc.Attributes.Labels[label.TopologyNetwork.Name]; nw != "" {
		// Custom gateway port.
		if gwPortStr := svc.Attributes.Labels[label.NetworkingGatewayPort.Name]; gwPortStr != "" {
			if gwPort, err := strconv.Atoi(gwPortStr); err == nil {
				return []model.NetworkGateway{{Port: uint32(gwPort), Network: network.ID(nw)}}
			}
		}
		// The default port is 15443.
		return []model.NetworkGateway{{Port: DefaultNetworkGatewayPort, Network: network.ID(nw)}}
	}
	// Otherwise look the service up in the static MeshNetworks configuration, which is not configured here.
	if gws, ok := n.registryServiceNameGateways[svc.Hostname]; ok {
		out := append(make([]model.NetworkGateway, 0, len(gws)), gws...)
		return out
	}
	return nil
}

|
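The label-based detection in getGatewayDetails can be tried out in isolation with the following sketch. It works on a plain label map instead of a model.Service. The "topology.istio.io/network" label and the default port 15443 come from the text above; the value "networking.istio.io/gatewayPort" for the port label is an assumption about what label.NetworkingGatewayPort.Name resolves to, and gatewayFromLabels is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"strconv"
)

const (
	topologyNetworkLabel = "topology.istio.io/network"
	// Assumed value of label.NetworkingGatewayPort.Name.
	gatewayPortLabel   = "networking.istio.io/gatewayPort"
	defaultGatewayPort = 15443
)

// gateway carries only the network and the (unmapped) port, as in the excerpt.
type gateway struct {
	Network string
	Port    uint32
}

// gatewayFromLabels (hypothetical helper) reports whether the labels describe
// an east-west gateway and, if so, which network and port it serves.
func gatewayFromLabels(labels map[string]string) (gateway, bool) {
	nw := labels[topologyNetworkLabel]
	if nw == "" {
		return gateway{}, false
	}
	if s := labels[gatewayPortLabel]; s != "" {
		// Fall back to the default port when the label is not a valid port number.
		if p, err := strconv.ParseUint(s, 10, 32); err == nil {
			return gateway{Network: nw, Port: uint32(p)}, true
		}
	}
	return gateway{Network: nw, Port: defaultGatewayPort}, true
}

func main() {
	gw, ok := gatewayFromLabels(map[string]string{topologyNetworkLabel: "network1"})
	fmt.Println(gw.Network, gw.Port, ok) // network1 15443 true

	_, ok = gatewayFromLabels(map[string]string{"app": "reviews"})
	fmt.Println(ok) // false
}
```

Unlike the excerpt, the sketch parses the port with strconv.ParseUint so that negative or oversized values also fall back to the default.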

extractGatewaysInner is called from extractGatewaysFromService, which is called from addOrUpdateService,

which in turn is called from onServiceEvent. In other words, once a remote cluster has been initialized, the services received from it are handled by onServiceEvent [TODO]:

|

// pilot/pkg/serviceregistry/kube/controller/network.go
// extractGatewaysFromService checks whether the service is a cross-network gateway and whether it changed.
func (c *Controller) extractGatewaysFromService(svc *model.Service) bool {
	changed := c.extractGatewaysInner(svc)
	if changed {
		c.NotifyGatewayHandlers()
	}
	return changed
}

// For an eastwestgateway with an external IP configured, extractGatewaysFromService is called.
func (c *Controller) addOrUpdateService(curr *v1.Service, currConv *model.Service, event model.Event, updateEDSCache bool) {
	needsFullPush := false
	// Handle nodePort gateway services that specify external IPs, and load balancer gateway services.
	if !currConv.Attributes.ClusterExternalAddresses.IsEmpty() {
		needsFullPush = c.extractGatewaysFromService(currConv)
	} else if isNodePortGatewayService(curr) { // uses the node selector annotation "traffic.istio.io/nodeSelector"
		nodeSelector := getNodeSelectorsForService(curr)
		c.Lock()
		c.nodeSelectorsForServices[currConv.Hostname] = nodeSelector
		c.Unlock()
		needsFullPush = c.updateServiceNodePortAddresses(currConv)
	}

	var prevConv *model.Service
	// Instance conversion is needed when a service is added or updated.
	instances := kube.ExternalNameServiceInstances(curr, currConv)
	c.Lock()
	prevConv = c.servicesMap[currConv.Hostname]
	c.servicesMap[currConv.Hostname] = currConv
	if len(instances) > 0 {
		c.externalNameSvcInstanceMap[currConv.Hostname] = instances
	}
	c.Unlock()

	// Trigger a full push to update all endpoints.
	if needsFullPush {
		// The networks changed, so all endpoints need to be updated.
		c.opts.XDSUpdater.ConfigUpdate(&model.PushRequest{Full: true, Reason: []model.TriggerReason{model.NetworksTrigger}})
	}

	shard := model.ShardKeyFromRegistry(c)
	ns := currConv.Attributes.Namespace
	// Update on service changes: a service change leads to an endpoint update, but workload entries
	// need to be updated as well.
	if updateEDSCache || features.EnableK8SServiceSelectWorkloadEntries {
		endpoints := c.buildEndpointsForService(currConv, updateEDSCache)
		if len(endpoints) > 0 {
			c.opts.XDSUpdater.EDSCacheUpdate(shard, string(currConv.Hostname), ns, endpoints)
		}
	}
	c.opts.XDSUpdater.SvcUpdate(shard, string(currConv.Hostname), ns, event)
	// Notify the upstream handlers that the service changed.
	c.handlers.NotifyServiceHandlers(prevConv, currConv, event)
}

// onServiceEvent handles service events.
func (c *Controller) onServiceEvent(_, curr *v1.Service, event model.Event) error {
	log.Debugf("Handle event %s for service %s in namespace %s", event, curr.Name, curr.Namespace)
	// Create the standard k8s service (cluster.local).
	svcConv := kube.ConvertService(*curr, c.opts.DomainSuffix, c.Cluster())
	switch event {
	case model.EventDelete:
		c.deleteService(svcConv)
	default:
		c.addOrUpdateService(curr, svcConv, event, false)
	}
	return nil
}

|

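Analysis of pushing remote-cluster endpoints

The previous section only covered how east-west gateways are discovered. After services and endpoints are received from a remote cluster, how are the endpoint IPs and ports replaced with the IP and port of the east-west gateway?

First, after the proxy of each pod connects to istiod, execution enters the pushConnection function, which iterates over the xDS types and calls pushXds to generate a response for each: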

|

// pilot/pkg/xds/xdsgen.go
// pushXds pushes XDS resources for the given connection. The configuration is generated by the passed-in generator.
// Depending on the updates field, the generator may send a partial response, or no response at all if nothing changed.
func (s *DiscoveryServer) pushXds(con *Connection, w *model.WatchedResource, req *model.PushRequest) error {
	// Look up the resource generator.
	gen := s.findGenerator(w.TypeUrl, con)
	t0 := time.Now()

	// If delta is set, the client is requesting new resources or removing old ones. Generate only
	// the new resources it needs rather than the entire set of known resources.
	var logFiltered string
	if !req.Delta.IsEmpty() && features.PartialFullPushes &&
		!con.proxy.IsProxylessGrpc() {
		logFiltered = " filtered:" + strconv.Itoa(len(w.ResourceNames)-len(req.Delta.Subscribed))
		w = &model.WatchedResource{
			TypeUrl:       w.TypeUrl,
			ResourceNames: req.Delta.Subscribed.UnsortedList(),
		}
	}
	res, logdata, err := gen.Generate(con.proxy, w, req)
	// Note: this excerpt omits the code that builds the info log suffix (from logdata and logFiltered) used below.
	if err != nil || res == nil {
		// If there is nothing to send, report that we got an ACK for this version.
		if s.StatusReporter != nil {
			s.StatusReporter.RegisterEvent(con.conID, w.TypeUrl, req.Push.LedgerVersion)
		}
		if log.DebugEnabled() {
			log.Debugf("%s: SKIP%s for node:%s%s", v3.GetShortType(w.TypeUrl), req.PushReason(), con.proxy.ID, info)
		}
		// If we are sending a request, we must respond or we can get Envoy stuck. Assert we do.
		// One exception is if Envoy is simply unsubscribing from some resources, in which case we can skip.
		isUnsubscribe := features.PartialFullPushes && !req.Delta.IsEmpty() && req.Delta.Subscribed.IsEmpty()
		if features.EnableUnsafeAssertions && err == nil && res == nil && req.IsRequest() && !isUnsubscribe {
			log.Fatalf("%s: SKIPPED%s for node:%s%s but expected a response for request", v3.GetShortType(w.TypeUrl), req.PushReason(), con.proxy.ID, info)
		}
		return err
	}
	defer func() { recordPushTime(w.TypeUrl, time.Since(t0)) }()

	resp := &discovery.DiscoveryResponse{
		ControlPlane: ControlPlane(),
		TypeUrl:      w.TypeUrl,
		VersionInfo:  req.Push.PushVersion,
		Nonce:        nonce(req.Push.LedgerVersion),
		Resources:    model.ResourcesToAny(res),
	}
	configSize := ResourceSize(res)
	configSizeBytes.With(typeTag.Value(w.TypeUrl)).Record(float64(configSize))
	if err := con.send(resp); err != nil {
		if recordSendError(w.TypeUrl, err) {
			log.Warnf("%s: Send failure for node:%s resources:%d size:%s%s: %v",
				v3.GetShortType(w.TypeUrl), con.proxy.ID, len(res), util.ByteCount(configSize), info, err)
		}
		return err
	}
	return nil
}

|

The focus here is EDS. Let's look at how the Generate function of EdsGenerator builds the response:

|

// pilot/pkg/xds/eds.go
func (eds *EdsGenerator) Generate(proxy *model.Proxy, w *model.WatchedResource, req *model.PushRequest) (model.Resources, model.XdsLogDetails, error) {
	// Decide from the updated configs whether EDS needs to be pushed.
	if !edsNeedsPush(req.ConfigsUpdated) {
		return nil, model.DefaultXdsLogDetails, nil
	}
	resources, logDetails := eds.buildEndpoints(proxy, req, w)
	return resources, logDetails, nil
}

func (eds *EdsGenerator) buildEndpoints(proxy *model.Proxy,
	req *model.PushRequest,
	w *model.WatchedResource,
) (model.Resources, model.XdsLogDetails) {
	var edsUpdatedServices map[string]struct{}
	// canSendPartialFullPushes determines whether a partial push (a subset of the known CLAs) can be sent.
	// This is safe when only Services changed, because then only the CLAs of the associated Service changed.
	// Note that when a multi-network service changes, it triggers a push with ConfigsUpdated=ALL,
	// so partial pushes are not enabled in that case.
	// Although this code is on the SotW code path, sending these partial pushes is still allowed.
	if !req.Full || (features.PartialFullPushes && canSendPartialFullPushes(req)) {
		edsUpdatedServices = model.ConfigNamesOfKind(req.ConfigsUpdated, kind.ServiceEntry)
	}
	var resources model.Resources
	empty := 0
	cached := 0
	regenerated := 0
	// Iterate over all watched resources, which for EDS are clusters.
	for _, clusterName := range w.ResourceNames {
		if edsUpdatedServices != nil {
			_, _, hostname, _ := model.ParseSubsetKey(clusterName)
			if _, ok := edsUpdatedServices[string(hostname)]; !ok {
				// The cluster was not updated, so skip recomputing it. This happens when an
				// incremental update was received for some other hostname.
				// On connect or on a full push, edsUpdatedServices is empty.
				continue
			}
		}
		builder := NewEndpointBuilder(clusterName, proxy, req.Push)
		// generate eds from beginning
		{
			l := eds.Server.generateEndpoints(builder)
			if l == nil {
				continue
			}
			regenerated++
			if len(l.Endpoints) == 0 {
				empty++
			}
			resource := &discovery.Resource{
				Name:     l.ClusterName,
				Resource: protoconv.MessageToAny(l),
			}
			resources = append(resources, resource)
			eds.Server.Cache.Add(&builder, req, resource)
		}
	}
	return resources, model.XdsLogDetails{
		Incremental:    len(edsUpdatedServices) != 0,
		AdditionalInfo: fmt.Sprintf("empty:%v cached:%v/%v", empty, cached, cached+regenerated),
	}
}

func (s *DiscoveryServer) generateEndpoints(b EndpointBuilder) *endpoint.ClusterLoadAssignment {
	// Get the endpoints of the cluster.
	localityLbEndpoints, err := s.localityEndpointsForCluster(b)
	if err != nil {
		return buildEmptyClusterLoadAssignment(b.clusterName)
	}

	// Apply the split-horizon EDS filter.
	localityLbEndpoints = b.EndpointsByNetworkFilter(localityLbEndpoints)
	if model.IsDNSSrvSubsetKey(b.clusterName) {
		// For SNI-DNAT clusters, an AUTO_PASSTHROUGH gateway is used. AUTO_PASSTHROUGH passes mTLS requests through.
		// However, at the gateway there is no way to tell whether a request is a valid mTLS request, because the TLS is passed through.
		// To make sure traffic is only allowed to mTLS endpoints, filter out endpoints that are not mTLS.
		localityLbEndpoints = b.EndpointsWithMTLSFilter(localityLbEndpoints)
	}
	l := b.createClusterLoadAssignment(localityLbEndpoints)

	// If locality-aware routing is enabled, prioritize endpoints or set their lb weight.
	// Failover is only enabled when outlier detection is present; otherwise Envoy would never
	// detect that a host is unhealthy and redirect traffic.
	enableFailover, lb := getOutlierDetectionAndLoadBalancerSettings(b.DestinationRule(), b.port, b.subsetName)
	lbSetting := loadbalancer.GetLocalityLbSetting(b.push.Mesh.GetLocalityLbSetting(), lb.GetLocalityLbSetting())
	if lbSetting != nil {
		l = util.CloneClusterLoadAssignment(l)
		wrappedLocalityLbEndpoints := make([]*loadbalancer.WrappedLocalityLbEndpoints, len(localityLbEndpoints))
		for i := range localityLbEndpoints {
			wrappedLocalityLbEndpoints[i] = &loadbalancer.WrappedLocalityLbEndpoints{
				IstioEndpoints:      localityLbEndpoints[i].istioEndpoints,
				LocalityLbEndpoints: l.Endpoints[i],
			}
		}
		loadbalancer.ApplyLocalityLBSetting(l, wrappedLocalityLbEndpoints, b.locality, b.proxy.Labels, lbSetting, enableFailover)
	}
	return l
}

|
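The skip logic in buildEndpoints, which regenerates only the clusters whose hostname was updated, can be sketched on its own. The sketch assumes the usual Istio cluster name layout "direction|port|subset|hostname" (for example "outbound|9080||reviews.default.svc.cluster.local"); hostnameOfCluster is a hypothetical, simplified replacement for model.ParseSubsetKey, and clustersToRegenerate is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"strings"
)

// hostnameOfCluster extracts the hostname from a cluster name of the form
// "direction|port|subset|hostname". It returns "" for any other shape.
func hostnameOfCluster(name string) string {
	parts := strings.Split(name, "|")
	if len(parts) != 4 {
		return ""
	}
	return parts[3]
}

// clustersToRegenerate returns the watched clusters that need new endpoints.
// A nil updated set means "connect or full push": everything is regenerated.
func clustersToRegenerate(watched []string, updated map[string]struct{}) []string {
	if updated == nil {
		return watched
	}
	var out []string
	for _, c := range watched {
		if _, ok := updated[hostnameOfCluster(c)]; ok {
			out = append(out, c)
		}
	}
	return out
}

func main() {
	watched := []string{
		"outbound|9080||reviews.default.svc.cluster.local",
		"outbound|9080||ratings.default.svc.cluster.local",
	}
	updated := map[string]struct{}{"reviews.default.svc.cluster.local": {}}

	fmt.Println(clustersToRegenerate(watched, updated)) // only the reviews cluster
	fmt.Println(len(clustersToRegenerate(watched, nil))) // 2: full push
}
```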

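EndpointsByNetworkFilter processes the service endpoints from the point of view of the current sidecar. If an endpoint belongs to a remote network, the gateways for that endpoint's network and cluster are recorded in gatewayWeights,

and each of those gateways is then turned into an endpoint and added to the result: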

|

// pilot/pkg/xds/ep_filters.go
// EndpointsByNetworkFilter is a network filter function that supports split-horizon EDS: it filters endpoints
// based on the network of the connected sidecar.
// The filter removes all endpoints that are not in the sidecar's network and adds a gateway endpoint for each
// remote network (if a gateway exists and its address is an IP rather than a DNS name).
func (b *EndpointBuilder) EndpointsByNetworkFilter(endpoints []*LocalityEndpoints) []*LocalityEndpoints {
	// Check whether multi-network is enabled, that is, whether any east-west gateway was found.
	if !b.push.NetworkManager().IsMultiNetworkEnabled() {
		return endpoints
	}
	filtered := make([]*LocalityEndpoints, 0)

	// Scale all weights by the least common multiple of the gateways per network and per cluster.
	// This makes it easier to spread traffic to an endpoint across multiple network gateways,
	// which increases the reliability of the endpoint.
	scaleFactor := b.push.NetworkManager().GetLBWeightScaleFactor()

	// Iterate over all endpoints and add those on the same network as the current sidecar to the result.
	// Also count the endpoints of each remote network so the count can be used as the weight of the gateway endpoint.
	for _, ep := range endpoints {
		lbEndpoints := &LocalityEndpoints{
			llbEndpoints: endpoint.LocalityLbEndpoints{
				Locality: ep.llbEndpoints.Locality,
				Priority: ep.llbEndpoints.Priority,
			},
		}

		// Tracks the gateways in use and their aggregate weights.
		gatewayWeights := make(map[model.NetworkGateway]uint32)
		for i, lbEp := range ep.llbEndpoints.LbEndpoints {
			istioEndpoint := ep.istioEndpoints[i]
			// If the proxy cannot view the network of this endpoint, exclude it.
			if !b.proxyView.IsVisible(istioEndpoint) {
				continue
			}

			// Copy the endpoint so that its load-balancing weight can be scaled.
			weight := b.scaleEndpointLBWeight(lbEp, scaleFactor)
			if lbEp.GetLoadBalancingWeight().GetValue() != weight {
				lbEp = proto.Clone(lbEp).(*endpoint.LbEndpoint)
				lbEp.LoadBalancingWeight = &wrappers.UInt32Value{
					Value: weight,
				}
			}
			epNetwork := istioEndpoint.Network
			epCluster := istioEndpoint.Locality.ClusterID
			gateways := b.selectNetworkGateways(epNetwork, epCluster)

			// An endpoint is considered directly reachable if it is on the local network or on a
			// remote network that can be reached directly from the local network.
			if b.proxy.InNetwork(epNetwork) || len(gateways) == 0 {
				lbEndpoints.append(ep.istioEndpoints[i], lbEp)
				continue
			}

			// Cross-network traffic requires mTLS, so exclude the endpoint if mTLS is disabled for it.
			if b.mtlsChecker.isMtlsDisabled(lbEp) {
				continue
			}

			// Record the weight against the gateways in gatewayWeights.
			splitWeightAmongGateways(weight, gateways, gatewayWeights)
		}

		// Sort the gateways so that the generated endpoints are deterministic.
		gateways := make([]model.NetworkGateway, 0, len(gatewayWeights))
		for gw := range gatewayWeights {
			gateways = append(gateways, gw)
		}
		gateways = model.SortGateways(gateways)

		// Create endpoints for the gateways.
		for _, gw := range gateways {
			epWeight := gatewayWeights[gw]
			if epWeight == 0 {
				log.Warnf("gateway weight must be greater than 0, scaleFactor is %d", scaleFactor)
				epWeight = 1
			}
			epAddr := util.BuildAddress(gw.Addr, gw.Port)

			// Generate a fake IstioEndpoint to carry the network and cluster information.
			gwIstioEp := &model.IstioEndpoint{
				Network: gw.Network,
				Locality: model.Locality{
					ClusterID: gw.Cluster,
				},
				Labels: labelutil.AugmentLabels(nil, gw.Cluster, "", "", gw.Network),
			}

			// Generate the EDS endpoint for the gateway.
			gwEp := &endpoint.LbEndpoint{
				HostIdentifier: &endpoint.LbEndpoint_Endpoint{
					Endpoint: &endpoint.Endpoint{
						Address: epAddr,
					},
				},
				LoadBalancingWeight: &wrappers.UInt32Value{
					Value: epWeight,
				},
				Metadata: &core.Metadata{},
			}
			util.AppendLbEndpointMetadata(&model.EndpointMetadata{
				Network:   gw.Network,
				TLSMode:   model.IstioMutualTLSModeLabel,
				ClusterID: b.clusterID,
				Labels:    labels.Instance{},
			}, gwEp.Metadata)
			lbEndpoints.append(gwIstioEp, gwEp)
		}

		// Refresh the locality weight so that it equals the total weight of its endpoints.
		lbEndpoints.refreshWeight()
		filtered = append(filtered, lbEndpoints)
	}
	return filtered
}

// selectNetworkGateways selects the east-west gateways that best match the network and cluster. If no gateway
// matches both network and cluster, all gateways that match the network are returned.
func (b *EndpointBuilder) selectNetworkGateways(nw network.ID, c cluster.ID) []model.NetworkGateway {
	// GatewaysForNetworkAndCluster reads the byNetworkAndCluster field, which is set in NetworkManager.reload.
	gws := b.push.NetworkManager().GatewaysForNetworkAndCluster(nw, c)
	if len(gws) == 0 {
		// Match on the network only.
		gws = b.push.NetworkManager().GatewaysForNetwork(nw)
	}
	return gws
}

|

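In summary, when services and endpoints are received from a remote cluster, they are not on the same network as the sidecar, so the east-west gateway of the corresponding network is selected, turned into an endpoint, added to the result, and then pushed to the sidecar.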
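The replacement of remote endpoints by gateway endpoints can be condensed into the following standalone sketch. It is a simplified model under several assumptions: the types and the filterByNetwork function are hypothetical, the LCM scale factor, visibility check, and mTLS check are left out, and the weight of each remote endpoint is divided evenly among the gateways of its network, which is how splitWeightAmongGateways is assumed to behave. The addresses are example values.

```go
package main

import (
	"fmt"
	"sort"
)

// endpoint is a simplified EDS endpoint.
type endpoint struct {
	Addr    string
	Network string
	Weight  uint32
}

// gateway is a simplified east-west gateway.
type gateway struct {
	Network string
	Addr    string
	Port    uint32
}

// filterByNetwork keeps endpoints that are directly reachable from the proxy
// and replaces the remaining ones with weighted gateway endpoints.
func filterByNetwork(proxyNetwork string, eps []endpoint, gws map[string][]gateway) []endpoint {
	var out []endpoint
	gatewayWeights := make(map[gateway]uint32)
	for _, ep := range eps {
		remote := gws[ep.Network]
		// Same network, or no gateway known: treat as directly reachable.
		if ep.Network == proxyNetwork || len(remote) == 0 {
			out = append(out, ep)
			continue
		}
		// Spread the endpoint weight evenly across the network's gateways.
		per := ep.Weight / uint32(len(remote))
		for _, gw := range remote {
			gatewayWeights[gw] += per
		}
	}
	// Sort the gateways so the output is deterministic.
	keys := make([]gateway, 0, len(gatewayWeights))
	for gw := range gatewayWeights {
		keys = append(keys, gw)
	}
	sort.Slice(keys, func(i, j int) bool { return keys[i].Addr < keys[j].Addr })
	for _, gw := range keys {
		out = append(out, endpoint{
			Addr:    fmt.Sprintf("%s:%d", gw.Addr, gw.Port),
			Network: gw.Network,
			Weight:  gatewayWeights[gw],
		})
	}
	return out
}

func main() {
	eps := []endpoint{
		{Addr: "10.1.0.5:9080", Network: "network1", Weight: 1},
		{Addr: "10.2.0.7:9080", Network: "network2", Weight: 1},
		{Addr: "10.2.0.8:9080", Network: "network2", Weight: 1},
	}
	gws := map[string][]gateway{
		"network2": {{Network: "network2", Addr: "203.0.113.10", Port: 15443}},
	}
	for _, ep := range filterByNetwork("network1", eps, gws) {
		fmt.Println(ep.Addr, ep.Weight)
	}
}
```

For a sidecar on network1, the two network2 endpoints collapse into a single entry for the gateway at 203.0.113.10:15443 with weight 2, while the local endpoint is kept unchanged.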

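create-remote-secret source code

This command operates on the remote cluster: it creates the istio-reader-service-account and istiod-service-account service accounts in the istio-system namespace of the remote cluster, together with the related RBAC grants for both accounts, and on success returns the Secret needed by the controlling cluster. The main logic is in createRemoteSecret: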

|

// istioctl/pkg/multicluster/remote_secret.go
func createRemoteSecret(opt RemoteSecretOptions, client kube.CLIClient, env Environment) (*v1.Secret, Warning, error) {
	// ... get tokenSecret
	tokenSecret, err := getServiceAccountSecret(client, opt)
	var remoteSecret *v1.Secret
	switch opt.AuthType {
	case RemoteSecretAuthTypeBearerToken:
		// Create the remote secret for use by other clusters.
		remoteSecret, err = createRemoteSecretFromTokenAndServer(client, tokenSecret, opt.ClusterName, server, secretName)
	// ...
	default:
		err = fmt.Errorf("unsupported authentication type: %v", opt.AuthType)
	}
	return remoteSecret, warn, nil
}

func getServiceAccountSecret(client kube.CLIClient, opt RemoteSecretOptions) (*v1.Secret, error) {
	// Create the serviceaccount istio-reader-service-account in the given namespace of the current
	// cluster; the namespace is set with --istioNamespace.
	serviceAccount, err := getOrCreateServiceAccount(client, opt)
	// Create the corresponding secret istio-reader-service-account-istio-remote-secret-token.
	return getOrCreateServiceAccountSecret(serviceAccount, client, opt)
}

|

Here is an example of the created secret, which contains the token and ca.crt of the Kubernetes ServiceAccount:

|

# istio-reader-service-account-istio-remote-secret-token

apiVersion: v1

data:

ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJkakNDQVIyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQWpNU0V3SHdZRFZRUUREQmhyTTNNdGMyVnkKZG1WeUxXTmhRREUyT1RVd01EZzROamt3SGhjTk1qTXdPVEU0TURNME56UTVXaGNOTXpNd09URTFNRE0wTnpRNQpXakFqTVNFd0h3WURWUVFEREJock0zTXRjMlZ5ZG1WeUxXTmhRREUyT1RVd01EZzROamt3V1RBVEJnY3Foa2pPClBRSUJCZ2dxaGtqT1BRTUJCd05DQUFSdFRJdlVPQWpHcHA1MnRIamd4eVFDSk5ZVzNwM204MXVFb0VGUjdPcHUKbEw1Tll5d1hid2VacHo0eGZNcEcraFZqd0dZSldoM1RHRGVlUy9pbFh4OHpvMEl3UURBT0JnTlZIUThCQWY4RQpCQU1DQXFRd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlZIUTRFRmdRVXlMeFp1eDlyTE9LS1JSd1NMNXhXCnRIRHh5MUF3Q2dZSUtvWkl6ajBFQXdJRFJ3QXdSQUlnSi84MllGdXpjZTlqcmdpUkQyL2x5eUpJeEI2ckthN0QKdHJQTjBlZVZuYm9DSUQxTHFEbmpYSTRDQ1FkMEsybUdBVmRaNWlmeXV3cGd6TTNQbTg4djJSYXIKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

namespace: aXN0aW8tc3lzdGVt

token: ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNkltSkZhMVZEYlhWR01qaGtWVE5oYVVoS2JXaHVZMnQzTUdJMVNGaG9kMDlsYW5sak9VcFJTVmh2UkRnaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUpwYzNScGJ5MXplWE4wWlcwaUxDSnJkV0psY201bGRHVnpMbWx2TDNObGNuWnBZMlZoWTJOdmRXNTBMM05sWTNKbGRDNXVZVzFsSWpvaWFYTjBhVzh0Y21WaFpHVnlMWE5sY25acFkyVXRZV05qYjNWdWRDMXBjM1JwYnkxeVpXMXZkR1V0YzJWamNtVjBMWFJ2YTJWdUlpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WlhKMmFXTmxMV0ZqWTI5MWJuUXVibUZ0WlNJNkltbHpkR2x2TFhKbFlXUmxjaTF6WlhKMmFXTmxMV0ZqWTI5MWJuUWlMQ0pyZFdKbGNtNWxkR1Z6TG1sdkwzTmxjblpwWTJWaFkyTnZkVzUwTDNObGNuWnBZMlV0WVdOamIzVnVkQzUxYVdRaU9pSXhOamhrT1RVMlpTMWtOR1l4TFRSbFptVXRZalJpTmkxaVptSTBOVEExTkRJeFpXWWlMQ0p6ZFdJaU9pSnplWE4wWlcwNmMyVnlkbWxqWldGalkyOTFiblE2YVhOMGFXOHRjM2x6ZEdWdE9tbHpkR2x2TFhKbFlXUmxjaTF6WlhKMmFXTmxMV0ZqWTI5MWJuUWlmUS5ITU1BRzR6S0szaGRrcEM3ZHAtSldfWmgzS3dLYzZlcUdEaGdYcks5QlJ1WWNTRlRzRWd6ZTk0c2tlYVFBQWNhNkR2MGUxT1ZjbENiUmNJWl9NVUVhSjc3cmJMQmdoenBNalRQanYya2ZjQlA1aTVoQXRlQlZpcnpmaWRwd2owZjY2eG0yQ2xiQzNXOE9RY2xQcTBHcUgzY0xacWhSc05MMEl3a0xhSHpyX0J1QUc0WENmUXFJU0Z5Uk1HTnF2NnVJODVnbG9fZzBQN3VlY2xUVGhaSDlOWU5uZDd4MnVfaTlMYnZXQml5TUdvbWhEQTZDeEEwcHdQeXVzUk0yQjBoRGNnU1lYOGxLdkMzQnZuWndZQk5Dak4wTUtkQnZrOVBSd09lck1kd3BBVVdNcl9JYm1VdUZfNHliTnlkSHFvU3F0anp5MFpSMFZCbHQwN0NQNFp2cXc=

kind: Secret

type: kubernetes.io/service-account-token

|

Finally, running the command istioctl x create-remote-secret --name=cluster2 produces the following output, which is generated from the contents of the secret above:

|

apiVersion: v1

kind: Secret

metadata:

annotations:

networking.istio.io/cluster: cluster2

labels:

istio/multiCluster: "true"

name: istio-remote-secret-cluster2

namespace: istio-system

stringData:

cluster2: |

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJkakNDQVIyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQWpNU0V3SHdZRFZRUUREQmhyTTNNdGMyVnkKZG1WeUxXTmhRREUyT1RVd01EZzROamt3SGhjTk1qTXdPVEU0TURNME56UTVXaGNOTXpNd09URTFNRE0wTnpRNQpXakFqTVNFd0h3WURWUVFEREJock0zTXRjMlZ5ZG1WeUxXTmhRREUyT1RVd01EZzROamt3V1RBVEJnY3Foa2pPClBRSUJCZ2dxaGtqT1BRTUJCd05DQUFSdFRJdlVPQWpHcHA1MnRIamd4eVFDSk5ZVzNwM204MXVFb0VGUjdPcHUKbEw1Tll5d1hid2VacHo0eGZNcEcraFZqd0dZSldoM1RHRGVlUy9pbFh4OHpvMEl3UURBT0JnTlZIUThCQWY4RQpCQU1DQXFRd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlZIUTRFRmdRVXlMeFp1eDlyTE9LS1JSd1NMNXhXCnRIRHh5MUF3Q2dZSUtvWkl6ajBFQXdJRFJ3QXdSQUlnSi84MllGdXpjZTlqcmdpUkQyL2x5eUpJeEI2ckthN0QKdHJQTjBlZVZuYm9DSUQxTHFEbmpYSTRDQ1FkMEsybUdBVmRaNWlmeXV3cGd6TTNQbTg4djJSYXIKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.60.57:6443

name: cluster2

contexts:

- context:

cluster: cluster2

user: cluster2

name: cluster2

current-context: cluster2

kind: Config

preferences: {}

users:

- name: cluster2

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImJFa1VDbXVGMjhkVTNhaUhKbWhuY2t3MGI1SFhod09lanljOUpRSVhvRDgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJpc3Rpby1zeXN0ZW0iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoiaXN0aW8tcmVhZGVyLXNlcnZpY2UtYWNjb3VudC1pc3Rpby1yZW1vdGUtc2VjcmV0LXRva2VuIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImlzdGlvLXJlYWRlci1zZXJ2aWNlLWFjY291bnQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIxNjhkOTU2ZS1kNGYxLTRlZmUtYjRiNi1iZmI0NTA1NDIxZWYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6aXN0aW8tc3lzdGVtOmlzdGlvLXJlYWRlci1zZXJ2aWNlLWFjY291bnQifQ.HMMAG4zKK3hdkpC7dp-JW_Zh3KwKc6eqGDhgXrK9BRuYcSFTsEgze94skeaQAAca6Dv0e1OVclCbRcIZ_MUEaJ77rbLBghzpMjTPjv2kfcBP5i5hAteBVirzfidpwj0f66xm2ClbC3W8OQclPq0GqH3cLZqhRsNL0IwkLaHzr_BuAG4XCfQqISFyRMGNqv6uI85glo_g0P7ueclTThZH9NYNnd7x2u_i9LbvWBiyMGomhDA6CxA0pwPyusRM2B0hDcgSYX8lKvC3BvnZwYBNCjN0MKdBvk9PRwOerMdwpAUWMr_IbmUuF_4ybNydHqoSqtjzy0ZR0VBlt07CP4Zvqw

|
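The relationship between the two listings can be checked by hand: values under data in a Kubernetes Secret are base64-encoded, so the token in the kubeconfig is the decoded form of the token field of the service account secret, while certificate-authority-data carries the ca.crt value as is. The following minimal sketch decodes the short namespace value from the secret above; decodeSecretValue is a hypothetical helper name.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeSecretValue decodes one base64-encoded value from a Secret's data map.
func decodeSecretValue(v string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(v)
	if err != nil {
		return "", err
	}
	return string(raw), nil
}

func main() {
	// The namespace field of the service account secret shown above.
	ns, err := decodeSecretValue("aXN0aW8tc3lzdGVt")
	if err != nil {
		panic(err)
	}
	fmt.Println(ns) // istio-system
}
```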

Uninstall

|

kubectl delete ns sample

kubectl delete ns istio-system

|

References