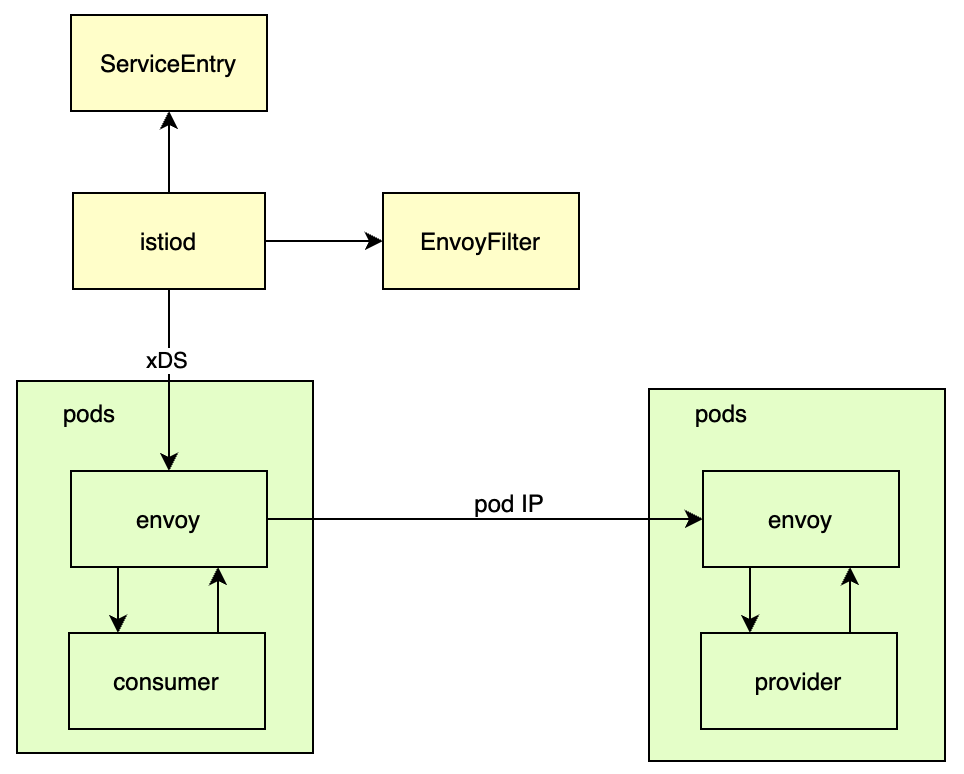

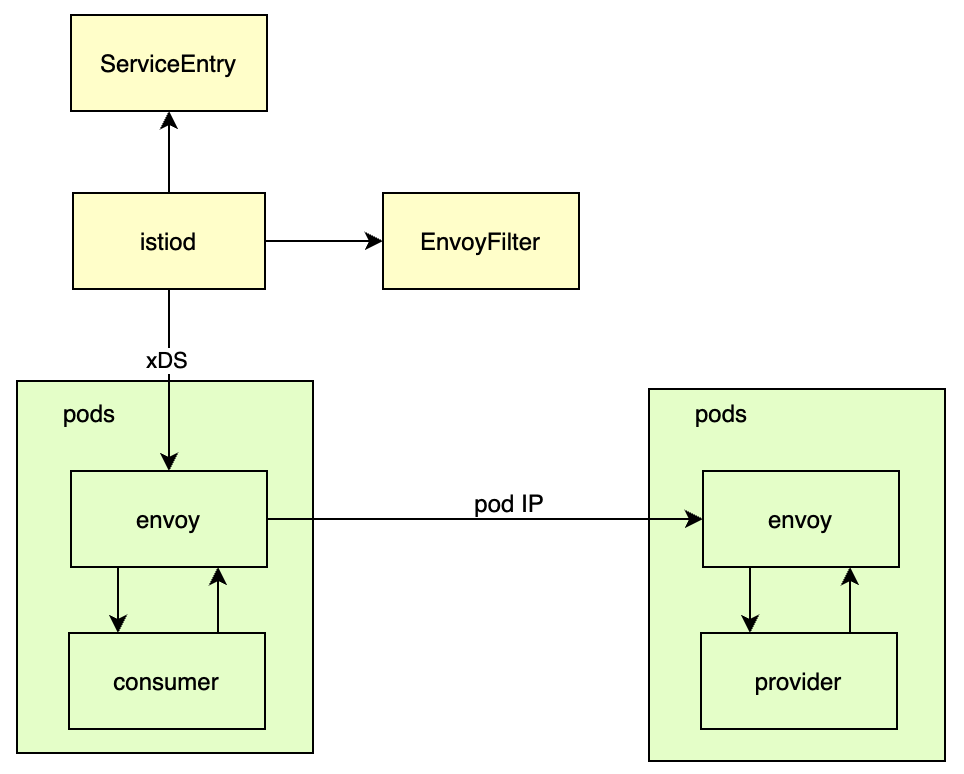

Overall flow

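This article builds on the dubbo-samples-zookeeper example from the official Dubbo samples and implements the following:

- provider and consumer are deployed in Kubernetes as container images, with the Istio sidecar injected

- the provider is identified through a ServiceEntry, so neither the provider nor the consumer needs to connect to a registry

- Dubbo protocol support is provided through an EnvoyFilter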

The implementation flow is shown in the figure below:

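Istio does not support the Dubbo protocol natively, so the drawback of this approach is that Istio's service-governance capabilities cannot be used for these calls. Compared with the service discovery flow described on the Dubbo website, the main difference is that neither the consumer nor the provider connects to a registry. Note: because the provider is located through the `host` in the ServiceEntry, the `ISTIO_META_DNS_CAPTURE` setting must be enabled when Istio is installed, so that the sidecar's DNS proxy can resolve that host to the ServiceEntry address.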

Integration steps

The complete example is available in the istio_demos repository on GitHub.

Code changes

In the original sample the consumer makes only a single call. Here a sleep is added after the call so that the container keeps running:

```java
package org.apache.dubbo.samples;

import java.util.concurrent.TimeUnit;

import org.springframework.context.annotation.AnnotationConfigApplicationContext;

public class ConsumerBootstrap {

    public static void main(String[] args) {
        AnnotationConfigApplicationContext context = new AnnotationConfigApplicationContext(ConsumerConfiguration.class);
        context.start();
        GreetingServiceConsumer greetingServiceConsumer = context.getBean(GreetingServiceConsumer.class);
        String hello = greetingServiceConsumer.doSayHello("zookeeper");
        System.out.println("result: " + hello);

        // Keep the JVM (and therefore the container) running after the single call.
        try {
            TimeUnit.DAYS.sleep(1);
        } catch (InterruptedException e) {
            // Restore the interrupt flag instead of swallowing the interruption.
            Thread.currentThread().interrupt();
        }
    }

    // ...
}
```
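Sleeping for one day is enough for a demo, but `main` returns once the day is over, after which the container may exit. A minimal sketch of an alternative, assuming the consumer should simply block until it is told to stop: park the main thread on a `CountDownLatch` that a JVM shutdown hook releases. `KeepAlive` and its methods are hypothetical names, not part of dubbo-samples.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical helper (not part of dubbo-samples): blocks a thread until released.
final class KeepAlive {
    private final CountDownLatch released = new CountDownLatch(1);

    // Release the latch when the JVM starts shutting down (e.g. on SIGTERM from Kubernetes).
    KeepAlive installShutdownHook() {
        Runtime.getRuntime().addShutdownHook(new Thread(released::countDown, "keep-alive-release"));
        return this;
    }

    // Release the latch explicitly; also handy for tests.
    void release() {
        released.countDown();
    }

    // Block until released or interrupted; the interrupt flag is preserved.
    void awaitRelease() {
        try {
            released.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Bounded variant: returns true if released within the timeout, false otherwise.
    boolean awaitRelease(long timeout, TimeUnit unit) {
        try {
            return released.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

With this helper, the `try`/`sleep` block in `ConsumerBootstrap.main` could be replaced by `new KeepAlive().installShutdownHook().awaitRelease();`.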

Building the images

The provider and consumer first need to be packaged as images. Start by getting the sample code:

```bash
git clone https://github.com/apache/dubbo-samples.git
cd dubbo-samples/3-extensions/registry/
cp -r dubbo-samples-zookeeper test
cd test
```

Change `dubbo-provider.properties` to:

```properties
# src/main/resources/spring/dubbo-provider.properties
dubbo.application.name=zookeeper-demo-provider
dubbo.registry.address=N/A
dubbo.protocol.name=dubbo
dubbo.protocol.port=20880
dubbo.provider.token=true
```

In this mode the consumer does not connect to a registry, so change `dubbo-consumer.properties` to:

```properties
# src/main/resources/spring/dubbo-consumer.properties
dubbo.application.name=zookeeper-demo-consumer
dubbo.consumer.timeout=3000
```

The provider's Dockerfile, `dockerfile_provider`, is:

```dockerfile
FROM openjdk:8
ADD ./target/dubbo-samples-zookeeper-1.0-SNAPSHOT.jar /dubbo-samples-zookeeper-1.0-SNAPSHOT.jar
EXPOSE 20880
ENTRYPOINT java -cp ./dubbo-samples-zookeeper-1.0-SNAPSHOT.jar org.apache.dubbo.samples.ProviderBootstrap
```

Because the local k3s cluster uses the containerd runtime by default, build the image with Docker, save it to a tarball, and then import it with `ctr`:

```bash
mvn package
docker build -f dockerfile_provider -t "127.0.0.1/provider:v0.0.1" .
docker save "127.0.0.1/provider:v0.0.1" -o provider_v001.tar
ctr -n k8s.io images import provider_v001.tar
```

At startup the consumer must connect directly to the provider's host (see the Dubbo documentation on connecting directly to a provider), so `dockerfile_consumer` is:

```dockerfile
FROM openjdk:8
ADD ./target/dubbo-samples-zookeeper-1.0-SNAPSHOT.jar /dubbo-samples-zookeeper-1.0-SNAPSHOT.jar
ENTRYPOINT java -Dorg.apache.dubbo.samples.api.GreetingService="dubbo://org.apache.dubbo.samples.api.greetingservice:20880" -cp ./dubbo-samples-zookeeper-1.0-SNAPSHOT.jar org.apache.dubbo.samples.ConsumerBootstrap
```
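The `-D<interface>=<url>` argument in the ENTRYPOINT is Dubbo's direct-connection setting: the key is the interface's fully qualified name, and the URL points at the ServiceEntry host rather than a real DNS name. The plain-JDK sketch below only illustrates how these pieces fit together; `DirectConnect` is a hypothetical helper, and lowercasing the interface name into a host is the naming convention used in this article, not a Dubbo or Istio rule.

```java
import java.net.URI;
import java.util.Locale;

// Hypothetical helper illustrating the direct-connection JVM argument in dockerfile_consumer.
final class DirectConnect {
    // Article convention (an assumption): the ServiceEntry host is the interface FQN in lower case.
    static String hostFor(String interfaceName) {
        return interfaceName.toLowerCase(Locale.ROOT);
    }

    // Builds "-D<interface>=dubbo://<host>:<port>", the form used in the ENTRYPOINT above.
    static String jvmArg(String interfaceName, String host, int port) {
        return "-D" + interfaceName + "=dubbo://" + host + ":" + port;
    }

    // Extracts host and port from a dubbo:// URL with the JDK URI parser (no DNS lookup).
    static String hostOf(String url) {
        return URI.create(url).getHost();
    }

    static int portOf(String url) {
        return URI.create(url).getPort();
    }

    public static void main(String[] args) {
        String iface = "org.apache.dubbo.samples.api.GreetingService";
        System.out.println(jvmArg(iface, hostFor(iface), 20880));
        // prints -Dorg.apache.dubbo.samples.api.GreetingService=dubbo://org.apache.dubbo.samples.api.greetingservice:20880
    }
}
```

Running `main` prints the same argument that `dockerfile_consumer` passes to `java`; in the Dockerfile the shell strips the quotes around the URL.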

Build the consumer image and import it into containerd in the same way:

```bash
mvn package
docker build -f dockerfile_consumer -t "127.0.0.1/consumer:v0.0.1" .
docker save "127.0.0.1/consumer:v0.0.1" -o consumer_v001.tar
ctr -n k8s.io images import consumer_v001.tar
```

ServiceEntry

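In the ServiceEntry, set `hosts` to the host name chosen for the provider's interface and select the provider pods as the workload. `addresses` can be any IP from a reserved range (240.240.0.20 here). Istio generates an outbound listener for this IP and port, and the EnvoyFilter uses them to find the filter to patch: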

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: dubbo-demoservice
  namespace: meta-dubbo
  annotations:
    interface: org.apache.dubbo.samples.api.GreetingService
spec:
  addresses:
  - 240.240.0.20
  hosts:
  - org.apache.dubbo.samples.api.greetingservice
  ports:
  - number: 20880
    name: tcp-dubbo
    protocol: TCP
  workloadSelector:
    labels:
      app: dubbo-sample-provider
      version: v1
  resolution: STATIC
```
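Two details of this ServiceEntry matter to the EnvoyFilter: the address should not collide with real IPs (240.240.0.20 lies in the reserved 240.0.0.0/4 block), and Istio names the outbound listener for a TCP port on that address `<address>_<port>`. A small plain-Java sketch of both checks; `ServiceEntryAddress` and its methods are hypothetical.

```java
// Hypothetical helper for sanity-checking a ServiceEntry address before writing the EnvoyFilter.
final class ServiceEntryAddress {
    // Parses a dotted-quad IPv4 literal without any DNS lookup.
    static int[] octets(String ipv4) {
        String[] parts = ipv4.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 literal: " + ipv4);
        }
        int[] out = new int[4];
        for (int i = 0; i < 4; i++) {
            int value = Integer.parseInt(parts[i]);
            if (value < 0 || value > 255) {
                throw new IllegalArgumentException("octet out of range: " + ipv4);
            }
            out[i] = value;
        }
        return out;
    }

    // 240.0.0.0/4 covers every address whose first octet is 240..255.
    static boolean inReserved240Block(String ipv4) {
        return octets(ipv4)[0] >= 240;
    }

    // Outbound listener name that the EnvoyFilter's match.listener.name refers to.
    static String listenerName(String ipv4, int port) {
        return ipv4 + "_" + port;
    }

    public static void main(String[] args) {
        String address = "240.240.0.20";
        System.out.println(inReserved240Block(address)); // true
        System.out.println(listenerName(address, 20880)); // 240.240.0.20_20880
    }
}
```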

EnvoyFilter

The EnvoyFilter modifies the Envoy configuration that Istio generates. Here it replaces the TCP proxy network filter with the Dubbo proxy filter:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: outbound-org.apache.dubbo.samples.api.greetingservice-240.240.0.20-20880
  namespace: meta-dubbo
spec:
  configPatches:
  - applyTo: NETWORK_FILTER
    match:
      listener:
        name: "240.240.0.20_20880"
        filterChain:
          filter:
            name: envoy.filters.network.tcp_proxy # the port is declared as TCP above, so Istio generates a TCP proxy filter
    patch:
      operation: REPLACE # replace the matched network filter
      value:
        name: envoy.filters.network.dubbo_proxy # with the Dubbo proxy filter
        typed_config:
          '@type': type.googleapis.com/envoy.extensions.filters.network.dubbo_proxy.v3.DubboProxy
          protocol_type: Dubbo
          serialization_type: Hessian2
          stat_prefix: outbound|20880||org.apache.dubbo.samples.api.greetingservice
          route_config:
          - name: outbound|20880||org.apache.dubbo.samples.api.GreetingService
            interface: org.apache.dubbo.samples.api.GreetingService # interface to match
            routes:
            - match:
                method:
                  name:
                    exact: sayHello # method to match
              route: # use the cluster generated from the ServiceEntry above
                cluster: outbound|20880||org.apache.dubbo.samples.api.greetingservice
```
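The `route_config` boils down to a lookup: a call is matched on its interface and then on the exact method name, and the matching route names a cluster whose name follows Istio's `outbound|<port>|<subset>|<host>` pattern (the subset is empty here). The sketch below is a simplified, hypothetical Java model of that lookup for illustration only; it is not how Envoy's `dubbo_proxy` filter is implemented.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Simplified, hypothetical model of the dubbo_proxy route_config above (not Envoy's implementation).
final class DubboRouteModel {
    // Istio outbound cluster names look like outbound|<port>|<subset>|<host>.
    static String outboundCluster(int port, String subset, String host) {
        return "outbound|" + port + "|" + subset + "|" + host;
    }

    // One route_config entry: an interface plus exact method-name matches mapped to clusters.
    static final class RouteConfig {
        final String iface;
        final Map<String, String> methodToCluster;

        RouteConfig(String iface, Map<String, String> methodToCluster) {
            this.iface = iface;
            this.methodToCluster = methodToCluster;
        }
    }

    // Returns the target cluster for a call, or empty when no route matches.
    static Optional<String> route(List<RouteConfig> configs, String iface, String method) {
        for (RouteConfig config : configs) {
            if (config.iface.equals(iface)) {
                return Optional.ofNullable(config.methodToCluster.get(method));
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        String cluster = outboundCluster(20880, "", "org.apache.dubbo.samples.api.greetingservice");
        List<RouteConfig> configs = Collections.singletonList(new RouteConfig(
                "org.apache.dubbo.samples.api.GreetingService",
                Collections.singletonMap("sayHello", cluster)));
        System.out.println(route(configs, "org.apache.dubbo.samples.api.GreetingService", "sayHello"));
        // Optional[outbound|20880||org.apache.dubbo.samples.api.greetingservice]
        System.out.println(route(configs, "org.apache.dubbo.samples.api.GreetingService", "sayGoodbye"));
        // Optional.empty
    }
}
```

In this model only `sayHello` is routed (`sayGoodbye` is a made-up method name); if other methods of `GreetingService` were called through the sidecar, they would likely need routes of their own.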

Deployment manifests

The provider manifest, `provider.yaml`, is:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-sample-provider
  labels:
    app: dubbo-sample-provider
spec:
  selector:
    matchLabels:
      app: dubbo-sample-provider
  replicas: 1
  template:
    metadata:
      labels:
        app: dubbo-sample-provider
        version: v1
    spec:
      containers:
      - name: provider
        image: 127.0.0.1/provider:v0.0.1
        imagePullPolicy: Never
        ports:
        - containerPort: 20880
```
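The ServiceEntry's `workloadSelector` picks the provider pods by label, and every selector label has to be present on the pod with the same value. That is why the provider's pod template carries `version: v1` in addition to `app`. A minimal sketch of this equality-based matching in plain Java; `LabelSelector.matches` is a hypothetical helper, not an Istio or Kubernetes API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: equality-based label matching, as used by selectors such as workloadSelector.
final class LabelSelector {
    // True if every selector label exists on the pod with the same value.
    static boolean matches(Map<String, String> selector, Map<String, String> podLabels) {
        for (Map.Entry<String, String> entry : selector.entrySet()) {
            if (!entry.getValue().equals(podLabels.get(entry.getKey()))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Selector labels from the ServiceEntry's workloadSelector.
        Map<String, String> selector = new HashMap<>();
        selector.put("app", "dubbo-sample-provider");
        selector.put("version", "v1");

        // Pod template labels of the provider Deployment.
        Map<String, String> providerPod = new HashMap<>(selector);
        // Pod template labels of the consumer Deployment.
        Map<String, String> consumerPod = new HashMap<>();
        consumerPod.put("app", "dubbo-sample-consumer");
        consumerPod.put("version", "v1");

        System.out.println(matches(selector, providerPod)); // true
        System.out.println(matches(selector, consumerPod)); // false
    }
}
```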

The consumer manifest, `consumer.yaml`, is:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-sample-consumer
  labels:
    app: dubbo-sample-consumer
spec:
  selector:
    matchLabels:
      app: dubbo-sample-consumer
  replicas: 1
  template:
    metadata:
      annotations:
        "sidecar.istio.io/logLevel": debug
      labels:
        app: dubbo-sample-consumer
        version: v1
    spec:
      containers:
      - name: consumer
        image: 127.0.0.1/consumer:v0.0.1
        imagePullPolicy: Never
```

References